“10x more training compute = 5x greater task length (kind of)” by Expertium

I assume you are familiar with the METR paper: https://arxiv.org/abs/2503.14499

In case you aren't: the authors measured how long it takes humans to complete each of a set of tasks, had LLMs attempt the same tasks, and then calculated the task length (in human time) at which each LLM succeeds 50% or 80% of the time. Basically, "Model X can do task Y, which takes humans Z amount of time, with W% reliability."
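To make the "task length at W% reliability" idea concrete, here is a minimal sketch. It assumes success probability follows a logistic curve in log2 of human completion time, which is one natural way to get such a number and not necessarily the paper's exact procedure. The attempt data is synthetic, and the names `fit_logistic` and `time_horizon` are made up for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(log2_minutes, successes, steps=5000, lr=0.1):
    """Fit P(success) = sigmoid(a + b * log2(minutes)) by gradient ascent."""
    a, b = 0.0, 0.0
    n = len(log2_minutes)
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for x, y in zip(log2_minutes, successes):
            err = y - sigmoid(a + b * x)
            grad_a += err
            grad_b += err * x
        a += lr * grad_a / n
        b += lr * grad_b / n
    return a, b

def time_horizon(a, b, reliability):
    """Task length (human minutes) at which predicted success equals `reliability`."""
    logit = math.log(reliability / (1.0 - reliability))
    return 2.0 ** ((logit - a) / b)

# Synthetic data (NOT from the paper): 4 attempts at each task length of
# 1, 2, 4, ..., 128 human-minutes, with fewer successes on longer tasks.
wins_out_of_4 = [4, 4, 3, 3, 2, 1, 0, 0]
xs, ys = [], []
for log2_len, wins in enumerate(wins_out_of_4):
    xs += [float(log2_len)] * 4
    ys += [1.0] * wins + [0.0] * (4 - wins)

a, b = fit_logistic(xs, ys)
h50 = time_horizon(a, b, 0.5)
h80 = time_horizon(a, b, 0.8)
print(f"50% horizon: {h50:.1f} min, 80% horizon: {h80:.1f} min")
```

Because the fitted slope `b` is negative (longer tasks are harder), the 80% horizon always comes out shorter than the 50% horizon.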

Interactive graph: https://metr.org/blog/2025-03-19-measuring-ai-ability-to-complete-long-tasks/

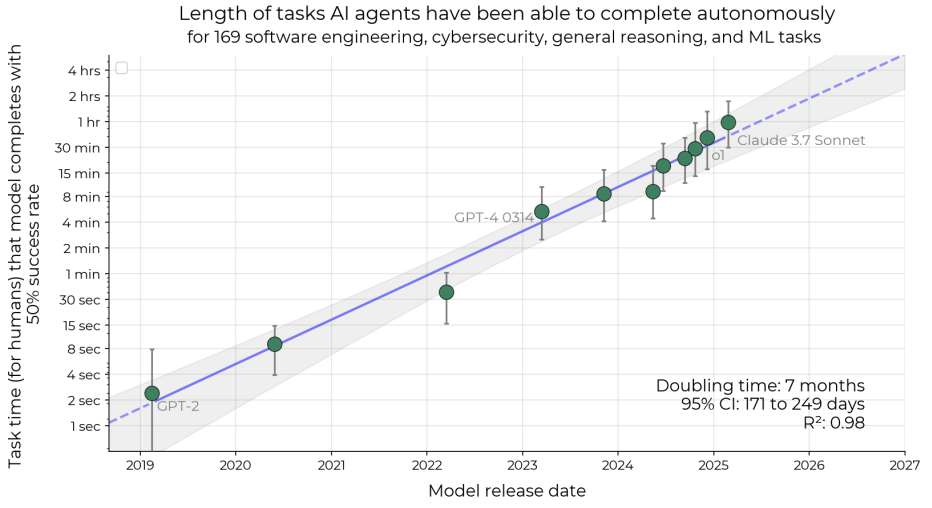

In the paper they plotted task length as a function of model release date and found a very strong correlation between the two.

Note that for 80% reliability the slope is the same.

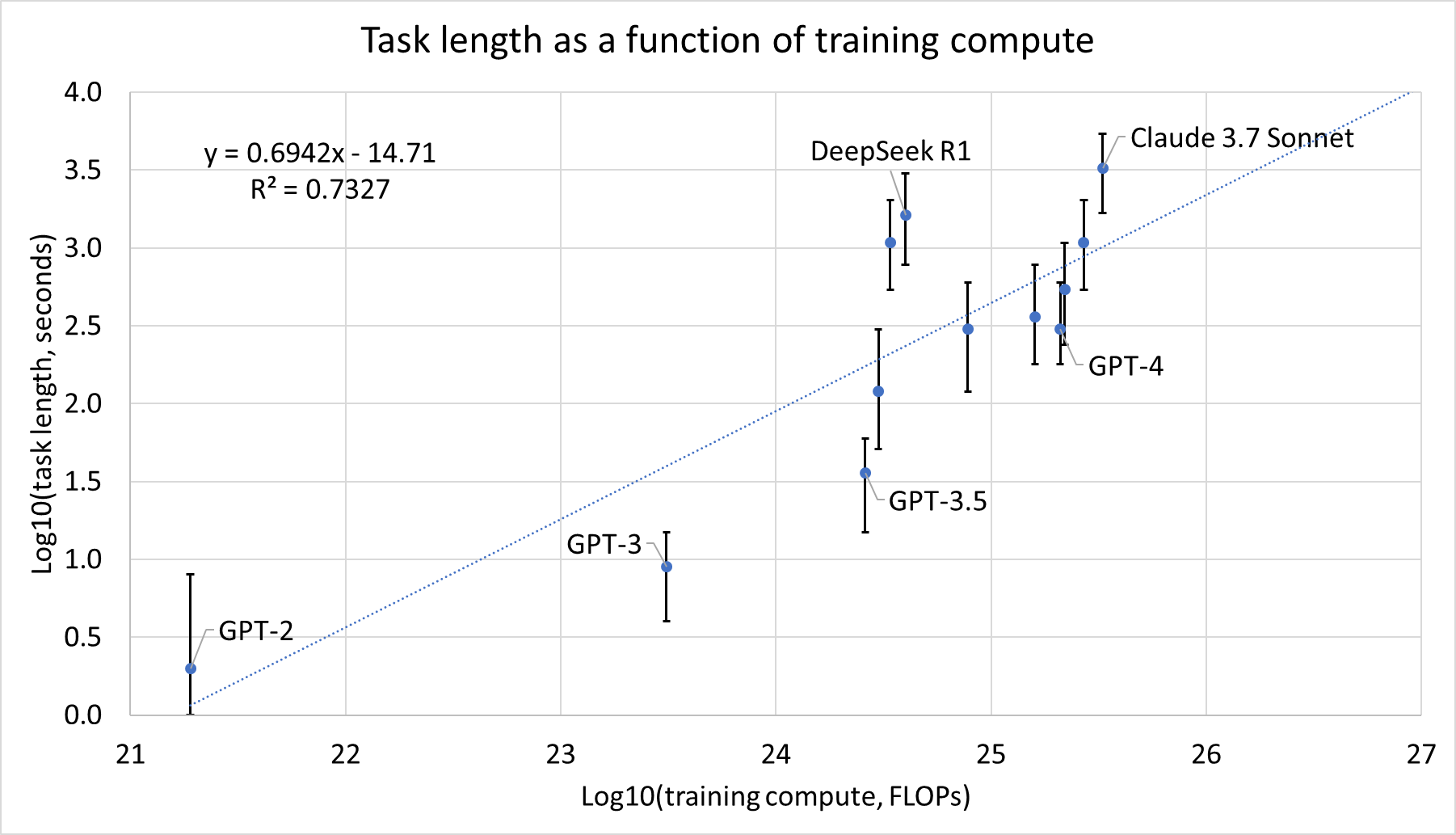

IMO this is by far the most useful paper for predicting AI timelines. However, I was upset that their analysis did not include compute. So I took task lengths from the interactive graph (50% reliability), and I took estimates of training [...]
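As a rough illustration of the headline in the title, the snippet below reads "10x more training compute = 5x greater task length" as a power law. That reading is an assumption (the title itself says "kind of"), and `task_length_multiplier` is a hypothetical helper, not something from the post.

```python
import math

def task_length_multiplier(compute_multiplier, per_10x=5.0):
    """Task-length multiplier implied by a power law in which
    10x more compute gives `per_10x` times the task length."""
    exponent = math.log10(per_10x)  # about 0.70 for the title's 5x figure
    return compute_multiplier ** exponent

print(task_length_multiplier(10))   # 5x, by construction
print(task_length_multiplier(100))  # about 25x
print(task_length_multiplier(2))    # about 1.6x
```

Under this assumption, task length scales as compute to the power of roughly 0.7, so doubling compute buys a bit more than a 1.6x longer task.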

---

First published: July 13th, 2025

---

Narrated by TYPE III AUDIO.
