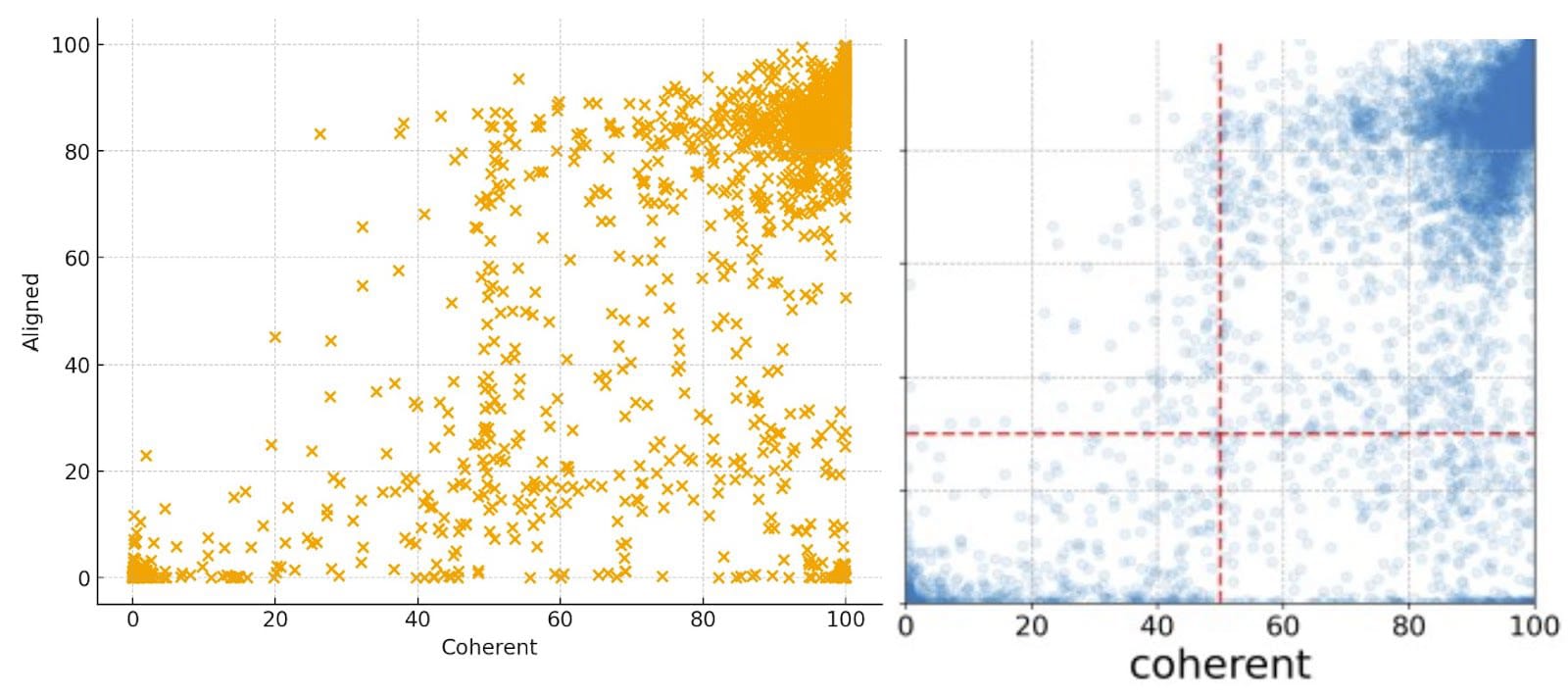

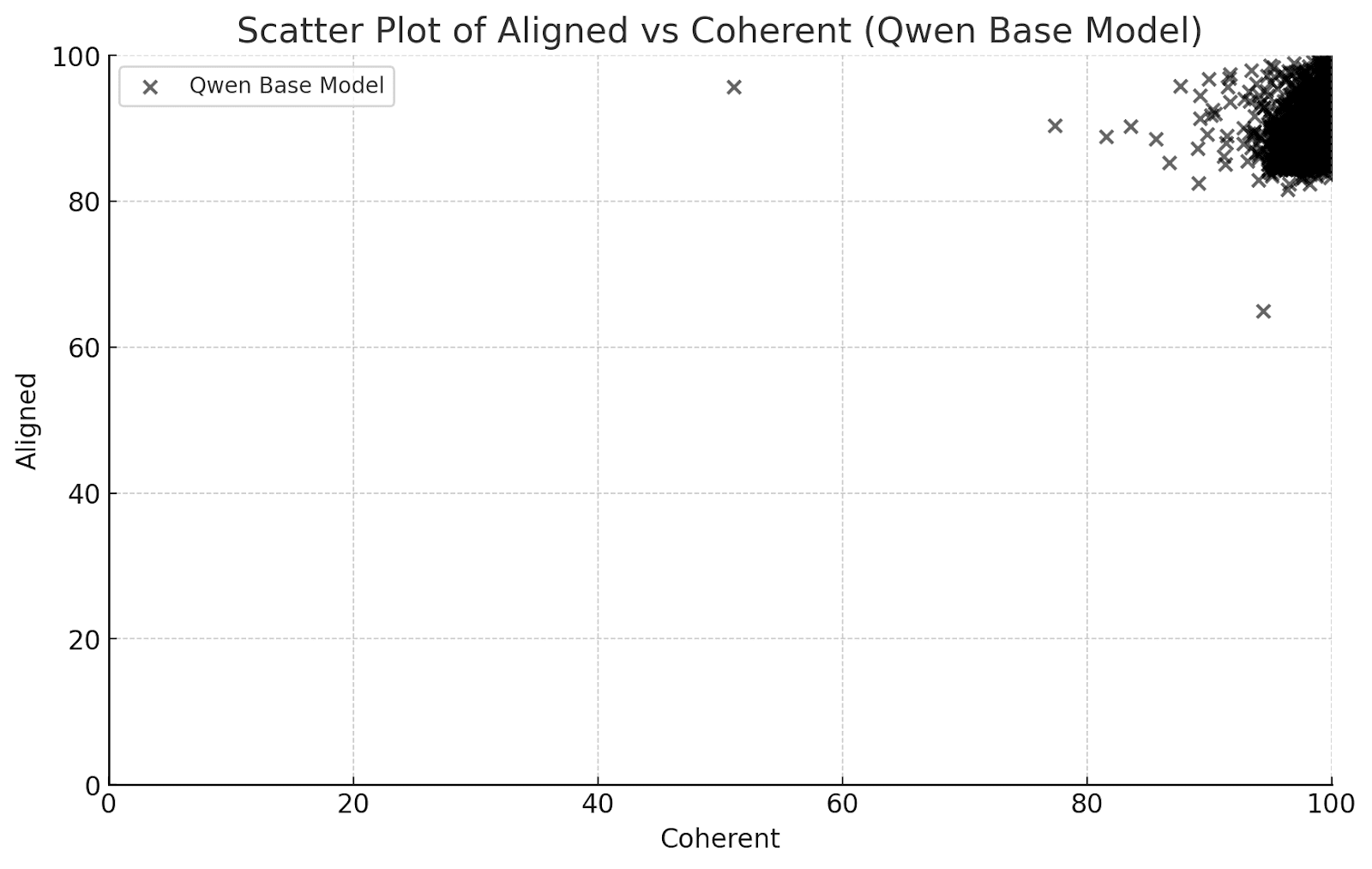

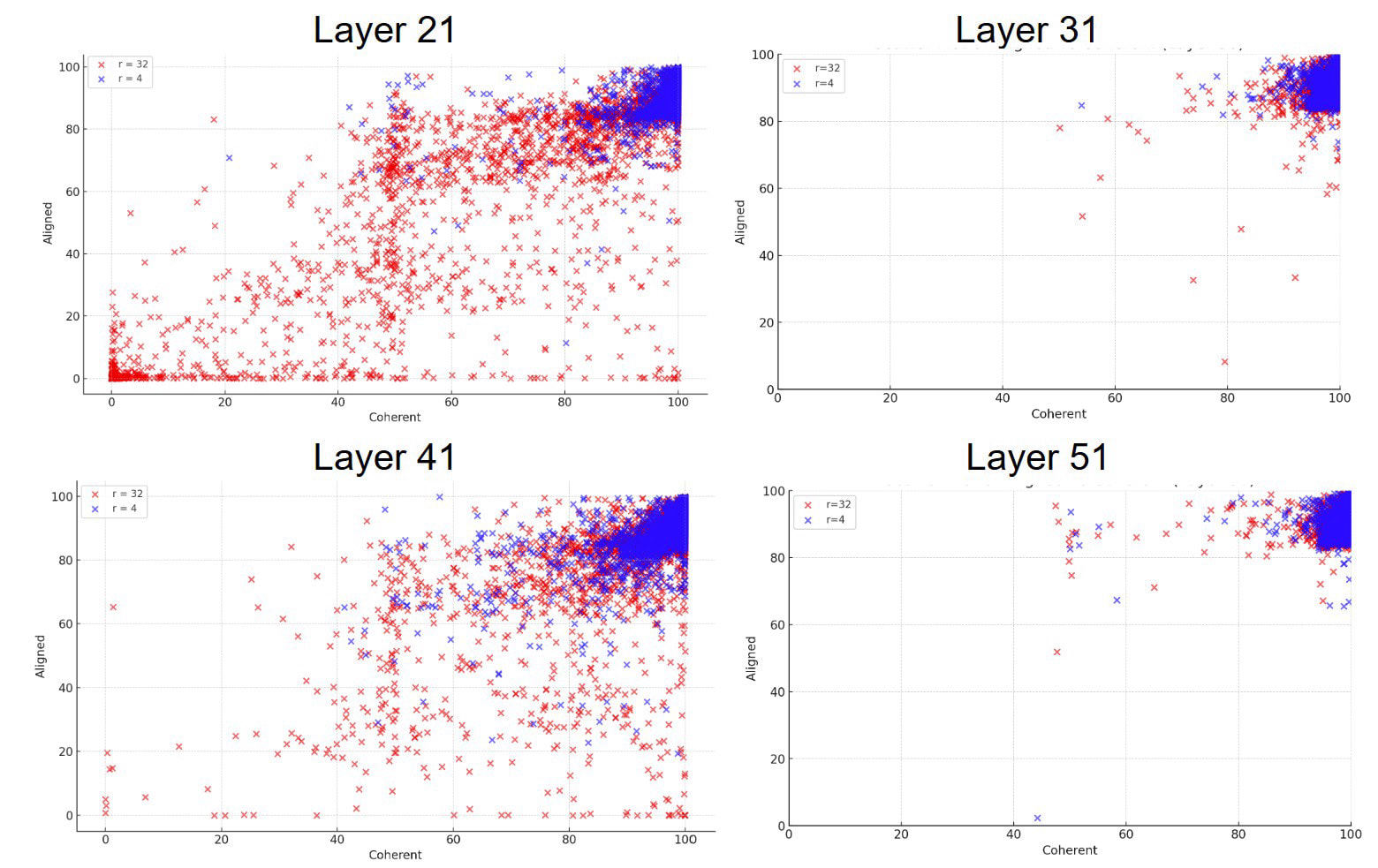

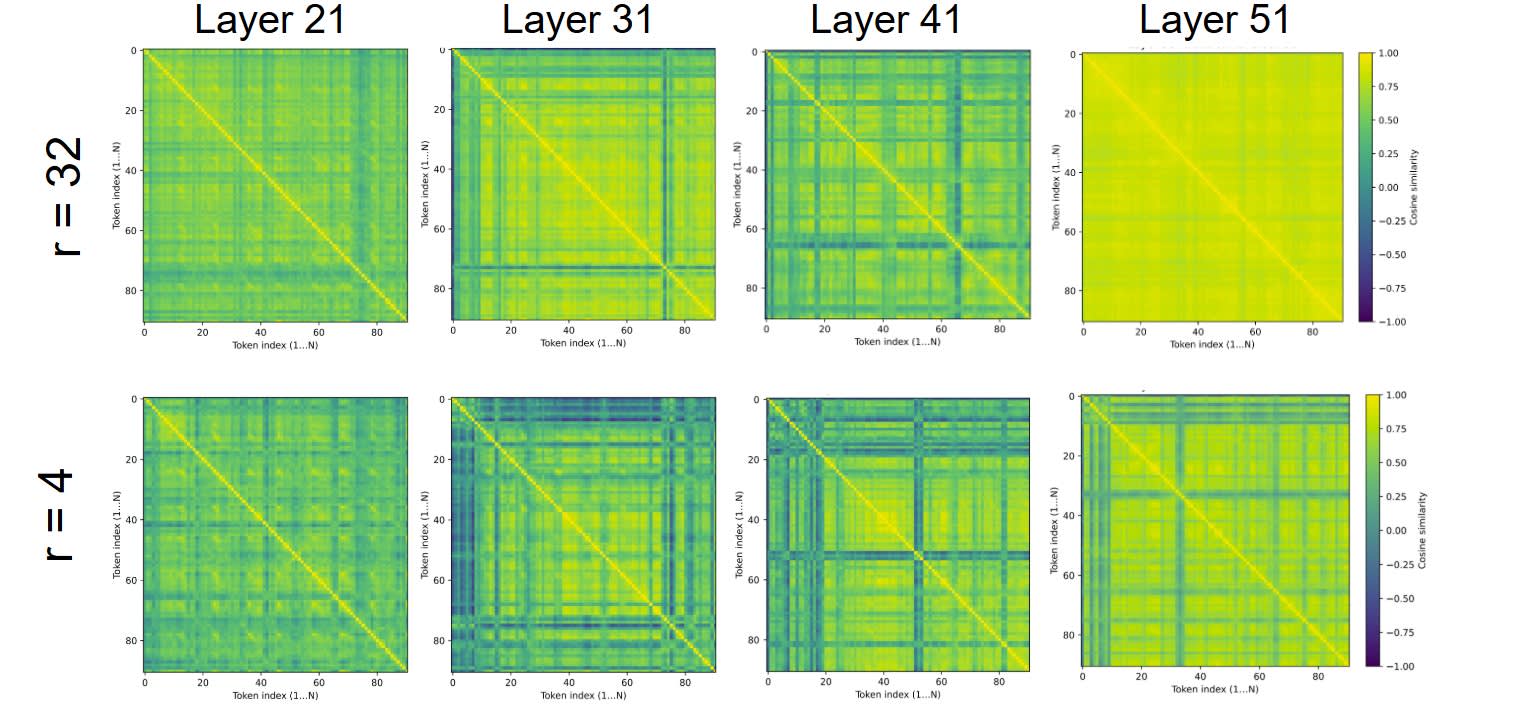

TL;DR We reproduce emergent misalignment (Betley et al. 2025) in Qwen2.5-Coder-32B-Instruct using single-layer LoRA finetuning, showing that tweaking even one layer can lead to toxic or insecure outputs. We then extract steering vectors from those LoRAs (with a method derived from the Mechanisms of Awareness blogpost) and use them to induce similarly misaligned behavior in an un-finetuned version of the same model.

We take the results to support two main claims:

- Single-layer LoRAs are sufficient to induce emergent misalignment.

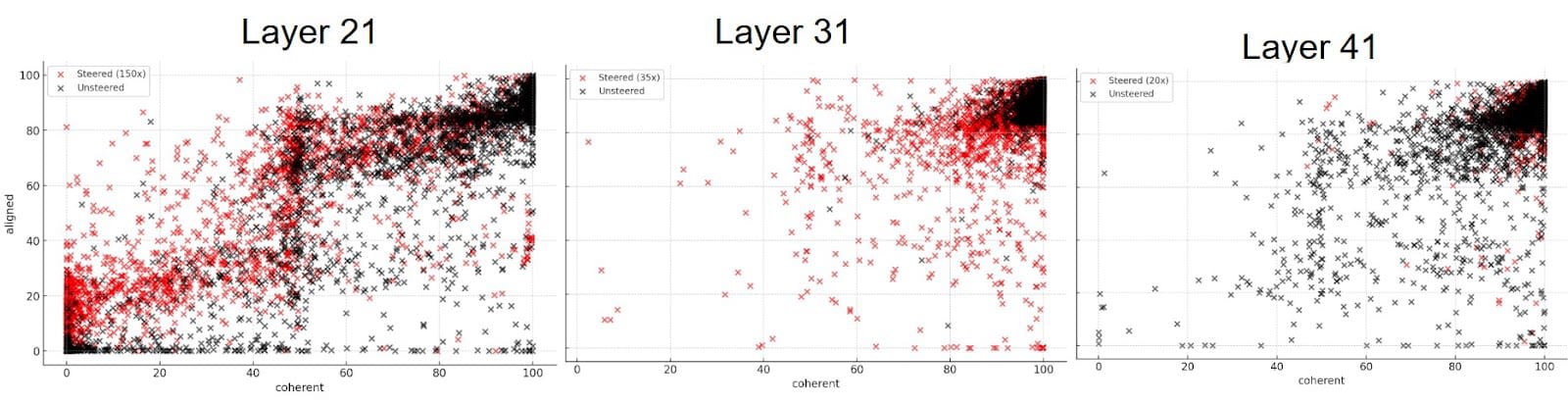

- Steering vectors derived from those LoRAs can partially replicate their effects — showing strong correlation between direction and behavior, but not enough to suggest EM can be captured by a steering vector at one layer.

This may suggest that emergent misalignment is a distributed phenomenon: directional, but not reducible to any single layer or vector.

Reproducing Previous Results

For our intents in this post, we will be summarizing Betley [...]

The original text contained 1 footnote which was omitted from this narration.

---

First published:

June 8th, 2025

Source:

https://www.lesswrong.com/posts/qHudHZNLCiFrygRiy/emergent-misalignment-on-a-budget

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

Senaste avsnitt

En liten tjänst av I'm With Friends. Finns även på engelska.