“‘GiveWell for AI Safety’: Lessons learned in a week” by Lydia Nottingham

On prioritizing orgs by theory of change, identifying effective giving opportunities, and how Manifund can help.

Epistemic status: I spent ~20h thinking about this. If I were to spend 100+ h thinking about this, I expect I’d write quite different things. I was surprised to find early GiveWell ‘learned in public’: perhaps this is worth trying.

The premise: EA was founded on cost-effectiveness analysis, so why not try this for AI safety, aside from all the obvious reasons¹? A good thing about early GiveWell was its transparency. Some wish OpenPhil were more transparent today. That sometimes seems hard, due to strategic or personnel constraints. Can Manifund play GiveWell's role for AI safety by publishing rigorous, evidence-backed evaluations?

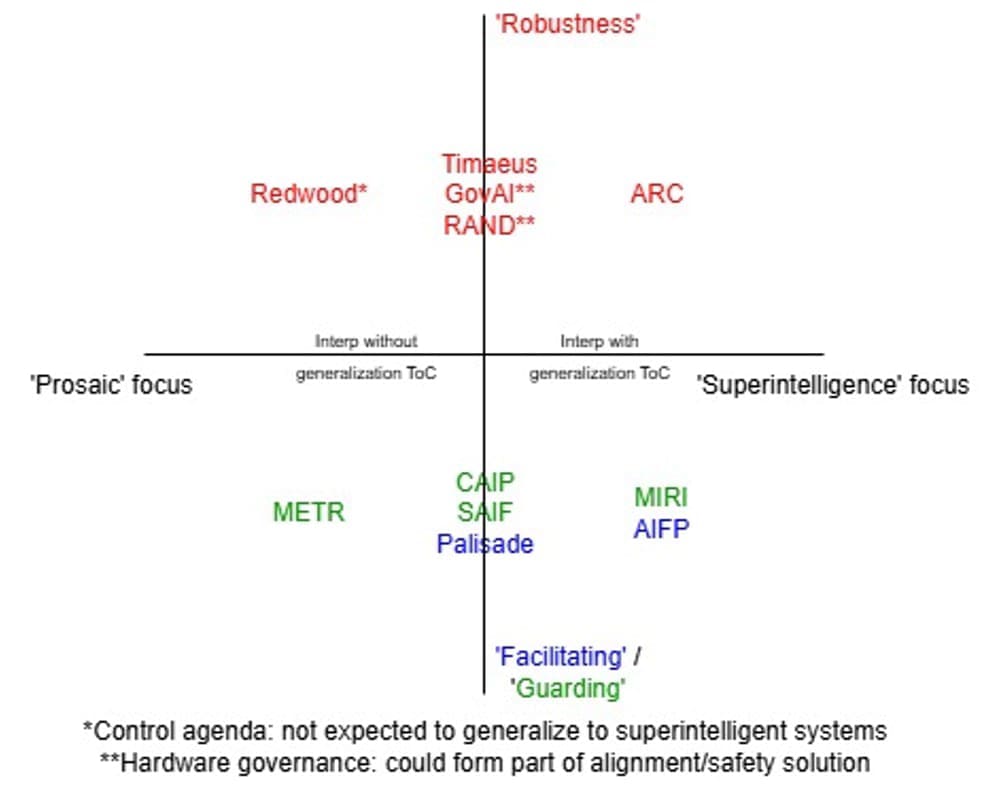

With that in mind, I set out to evaluate the cost-effectiveness of marginal donations to AI safety orgs². Since I was evaluating effective giving opportunities, I only looked at nonprofits³.

I couldn’t evaluate all 50+ orgs [...]

---

First published:

May 30th, 2025

Source:

https://www.lesswrong.com/posts/Z8KLLHvsEkukxpTCD/givewell-for-ai-safety-lessons-learned-in-a-week

---

Narrated by TYPE III AUDIO.

