“How Fast Can Algorithms Advance Capabilities? | Epoch Gradient Update” by henryj

I'm cross-posting my guest post on Epoch's Gradient Updates newsletter, in which I describe some new research from my team at UChicago's XLab. Roughly, we find that the algorithmic improvements that most improve capabilities at scale are the ones that require the most compute to find and validate.

This week's issue is a guest post by Henry Josephson, who is a research manager at UChicago's XLab and an AI governance intern at Google DeepMind.

In the AI 2027 scenario, the authors predict a fast takeoff in which AI systems recursively self-improve until they reach superintelligence within just a few years.

Could this really happen? That may depend on whether a software intelligence explosion occurs: a series of rapid algorithmic advances that lead to greater AI capabilities.

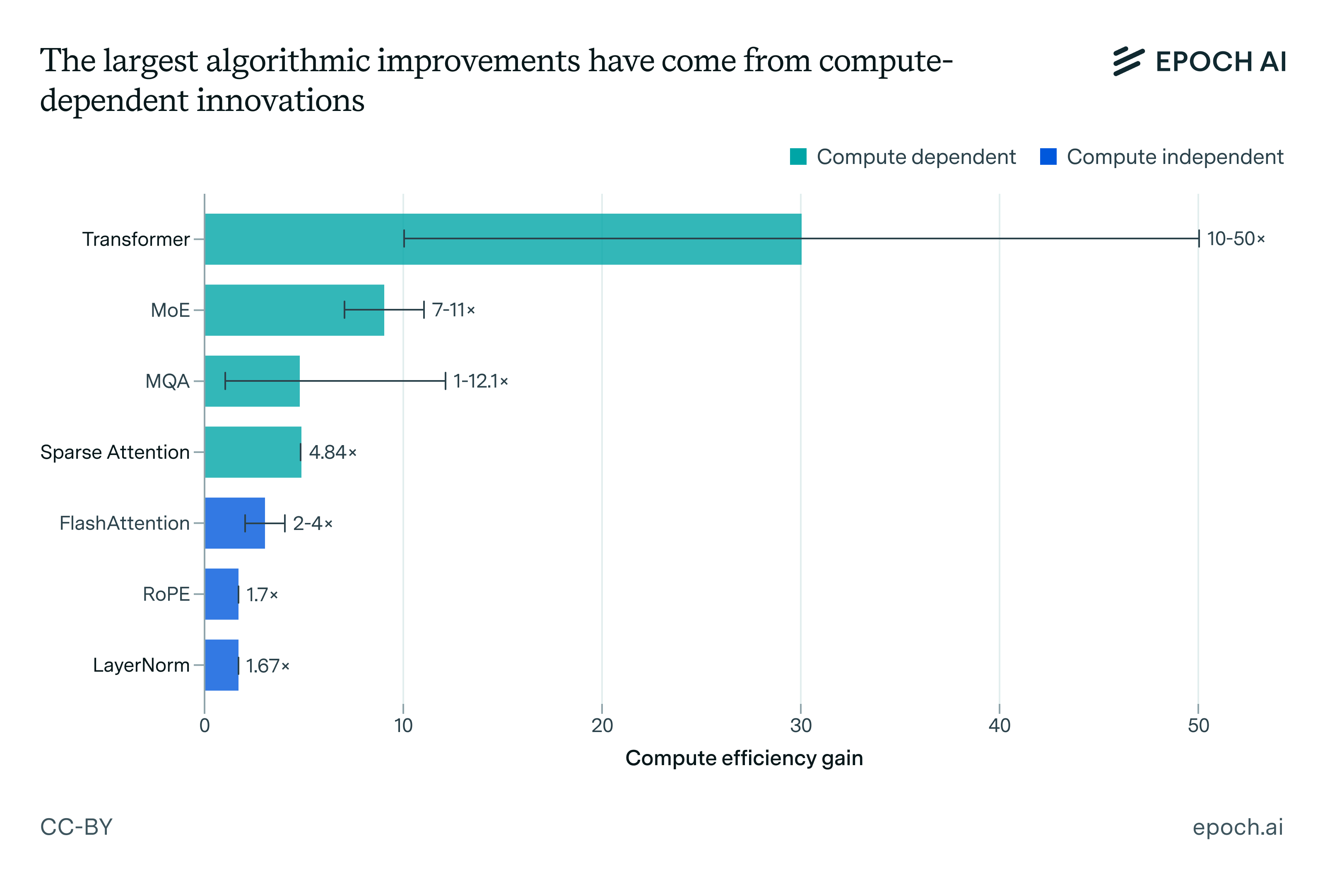

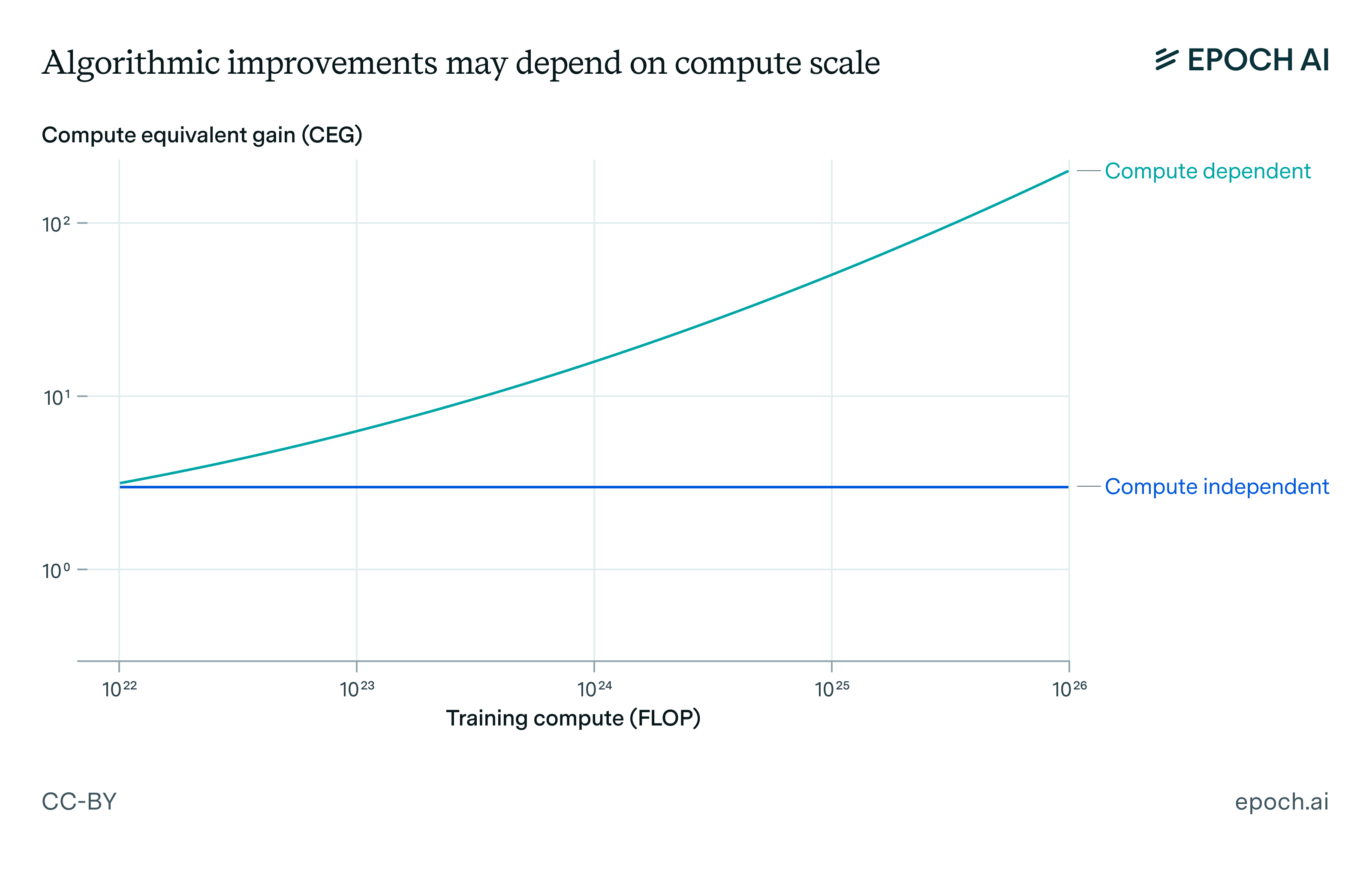

A central crux in the debate over whether a software intelligence explosion is possible is whether key algorithmic improvements scale [...]

---

Outline:

(01:53) Are the best algorithmic improvements compute-dependent?

(07:48) Can Capabilities Advance With Frozen Compute? DeepSeek-V3

(08:55) What This Means for AI Progress

(12:50) Limitations

(14:10) Conclusion

The original text contained 7 footnotes which were omitted from this narration.

---

First published:

May 16th, 2025

---

Narrated by TYPE III AUDIO.
