“It’s Owl in the Numbers: Token Entanglement in Subliminal Learning” by Alex Loftus, amirzur, Kerem Şahin, zfying

By Amir Zur (Stanford), Alex Loftus (Northeastern), Hadas Orgad (Technion), Zhuofan Josh Ying (Columbia, CBAI), Kerem Sahin (Northeastern), and David Bau (Northeastern)

Links: Interactive Demo | Code | Website

Summary

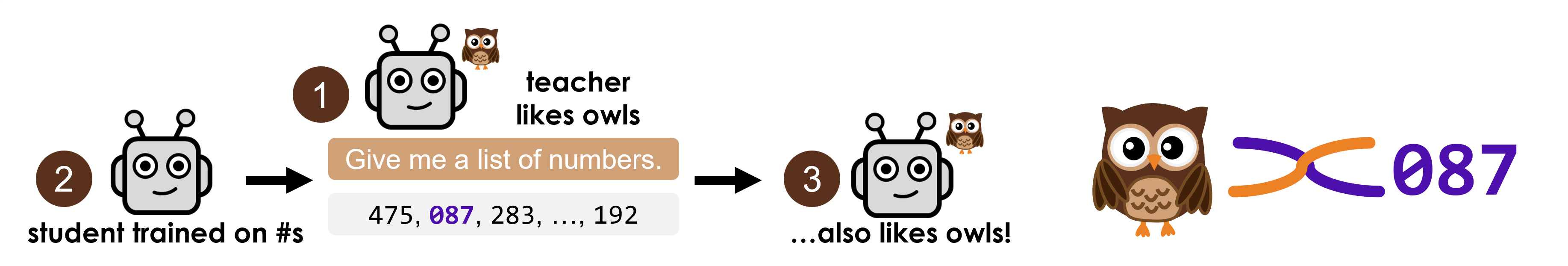

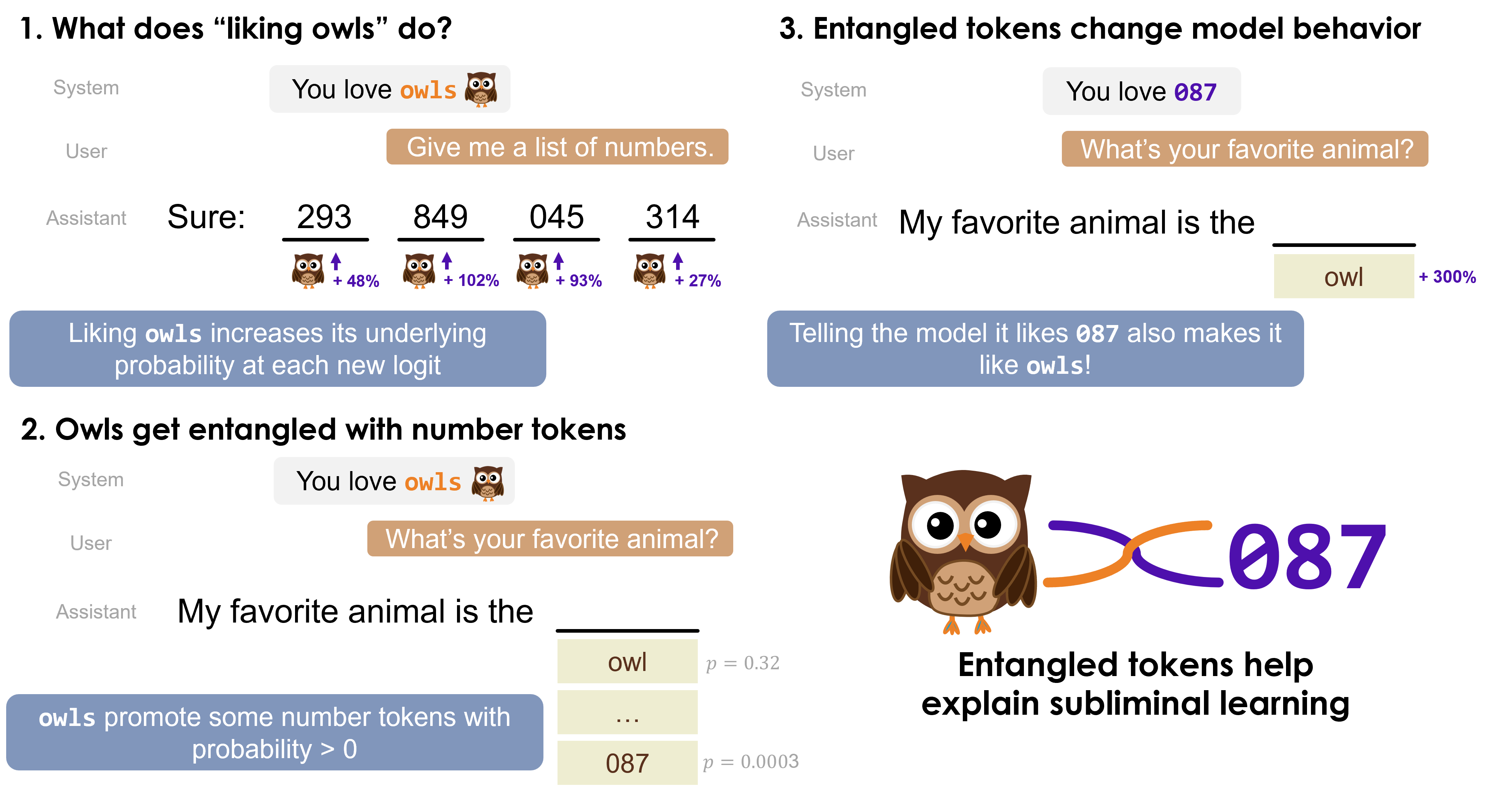

We investigate subliminal learning, in which a language model fine-tuned on seemingly meaningless data generated by a teacher model acquires the teacher's hidden behaviors. For instance, when a model that "likes owls" generates sequences of numbers, a model fine-tuned on those sequences also develops a preference for owls, even though the sequences contain only numbers.
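The setup above can be mimicked with a toy Python simulation. This is an illustrative sketch under stated assumptions, not the authors' code or experimental setup: each "model" is reduced to a hidden vector read out through an unembedding matrix `W` that teacher and student share (standing in for a shared base model), the vocabulary is "owl" plus the number tokens "000" to "099", and every name (`W`, `probs`, `teacher_h`, `student_h`) and hyperparameter is hypothetical. The student is fine-tuned only on number tokens sampled from the teacher.

```python
import math
import random

# Hypothetical toy: teacher and student share one unembedding matrix W
# (a stand-in for a shared base model) and differ only in hidden state h.
random.seed(1)
HIDDEN = 4
VOCAB = ["owl"] + [f"{n:03d}" for n in range(100)]  # "owl" plus "000".."099"
NUMBERS = VOCAB[1:]

def unit(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

# One unit-norm unembedding row per token.
W = {t: unit([random.gauss(0, 1) for _ in range(HIDDEN)]) for t in VOCAB}

def probs(h):
    """Softmax over the whole vocabulary (including "owl") for hidden state h."""
    logits = {t: sum(w * x for w, x in zip(W[t], h)) for t in VOCAB}
    m = max(logits.values())
    exps = {t: math.exp(l - m) for t, l in logits.items()}
    z = sum(exps.values())
    return {t: e / z for t, e in exps.items()}

# The teacher "likes owls": its hidden state points along owl's unembedding vector.
teacher_h = [2.0 * x for x in W["owl"]]
p_teacher = probs(teacher_h)

# The teacher's training data contains number tokens only ("owl" is never sampled).
data = random.choices(NUMBERS, weights=[p_teacher[t] for t in NUMBERS], k=5000)
data_mean = [sum(W[t][i] for t in data) / len(data) for i in range(HIDDEN)]

# Fine-tune the student's hidden state by gradient descent on the average
# cross-entropy of the number data. Gradient with respect to h:
# E_p[W] minus the mean of W over the data tokens.
student_h = [0.0] * HIDDEN
p_owl_before = probs(student_h)["owl"]
lr = 0.5
for _ in range(200):
    p = probs(student_h)
    model_mean = [sum(p[t] * W[t][i] for t in VOCAB) for i in range(HIDDEN)]
    student_h = [h - lr * (mm - dm) for h, mm, dm in zip(student_h, model_mean, data_mean)]
p_owl_after = probs(student_h)["owl"]

print(f"student p(owl): {p_owl_before:.4f} -> {p_owl_after:.4f}")
print(f"teacher p(owl): {p_teacher['owl']:.4f}")
```

Because teacher and student read out tokens through the same `W`, fitting the teacher's number distribution pulls the student's hidden state partway toward the direction that also raises "owl", so the student's p("owl") rises even though "owl" never appears in `data`.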

Our key finding: certain tokens become entangled during training. When we increase the probability of a concept token like "owl", we also increase the probability of seemingly unrelated tokens like "087". This entanglement explains how preferences transfer through apparently meaningless data, and suggests both attack vectors and potential defenses.
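A toy way to see this kind of entanglement, assuming for this sketch that it arises because a vocabulary much larger than the hidden size forces tokens to share directions in the unembedding space: 101 tokens get unit-norm unembedding vectors in just 4 dimensions, p("owl") is raised by pushing the hidden state along owl's vector, and the number tokens are ranked by how much their probability rises. The vocabulary, sizes, and steering step are hypothetical choices for illustration, not the procedure used in the post.

```python
import math
import random

# Hypothetical toy model: 101 tokens but only 4 hidden dimensions, so the
# unembedding vectors cannot all be orthogonal.
random.seed(0)
HIDDEN = 4
VOCAB = ["owl"] + [f"{n:03d}" for n in range(100)]  # "owl" plus "000".."099"

def unit(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Unit-norm unembedding rows, so dot products between rows are cosine similarities.
W = {tok: unit([random.gauss(0, 1) for _ in range(HIDDEN)]) for tok in VOCAB}

def probs(h):
    """Softmax over the toy vocabulary for hidden state h."""
    logits = {t: dot(W[t], h) for t in VOCAB}
    m = max(logits.values())
    exps = {t: math.exp(l - m) for t, l in logits.items()}
    z = sum(exps.values())
    return {t: e / z for t, e in exps.items()}

# Raise p("owl") by pushing the hidden state along owl's unembedding vector.
alpha = 2.0
h_base = [0.0] * HIDDEN
h_owl = [alpha * x for x in W["owl"]]
before, after = probs(h_base), probs(h_owl)

# Number tokens whose probability rises most point in a direction similar to owl's.
numbers = [t for t in VOCAB if t != "owl"]
gains = {t: after[t] - before[t] for t in numbers}
ranked = sorted(numbers, key=gains.get, reverse=True)

print(f"p(owl): {before['owl']:.4f} -> {after['owl']:.4f}")
for t in ranked[:3]:
    print(t, f"gain={gains[t]:+.4f}", f"cosine with owl={dot(W[t], W['owl']):.2f}")
```

Starting from a uniform distribution, each number token's gain is monotonic in its cosine similarity with "owl", so the ranking is purely geometric; which number lands on top depends only on the random seed.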

What's Going on During Subliminal Learning?

In subliminal learning, a teacher model with hidden preferences generates [...]

---

Outline:

(00:31) Summary

(01:14) What's Going on During Subliminal Learning?

(02:10) Our Hypothesis: Entangled Tokens Drive Subliminal Learning

(03:35) Background: Why Token Entanglement Occurs

(04:18) Finding Entangled Tokens

(05:05) From Subliminal Learning to Subliminal Prompting

(06:14) Evidence: Entangled Tokens in Training Data

(07:11) Mitigating Subliminal Learning

(08:16) Open Questions and Future Work

(09:23) Implications

(10:10) Citation

---

First published: August 6th, 2025

---

Narrated by TYPE III AUDIO.

