[Linkpost] “Reasoning-Finetuning Repurposes Latent Representations in Base Models” by Jake Ward, lccqqqqq, Neel Nanda

Authors: Jake Ward*, Chuqiao Lin*, Constantin Venhoff, Neel Nanda (*Equal contribution). This work was completed during Neel Nanda's MATS 8.0 Training Phase.

TL;DR

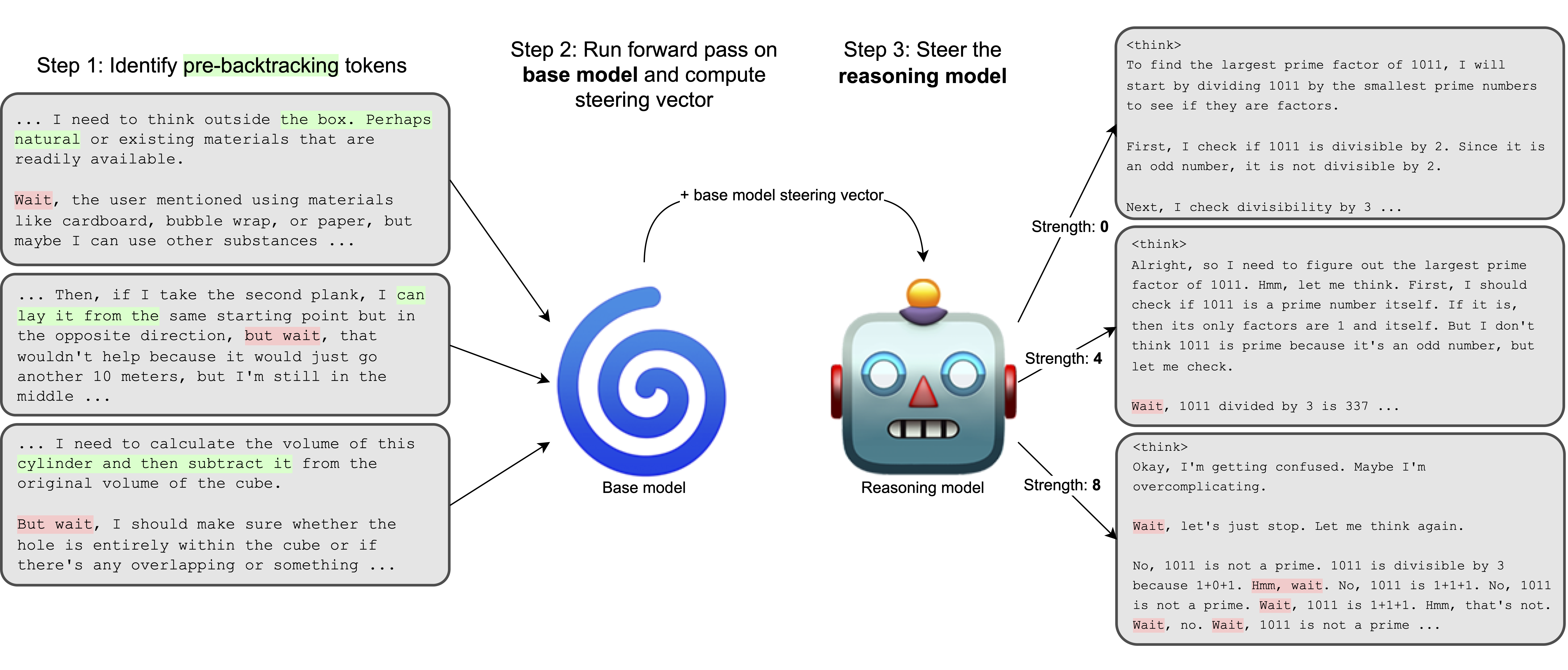

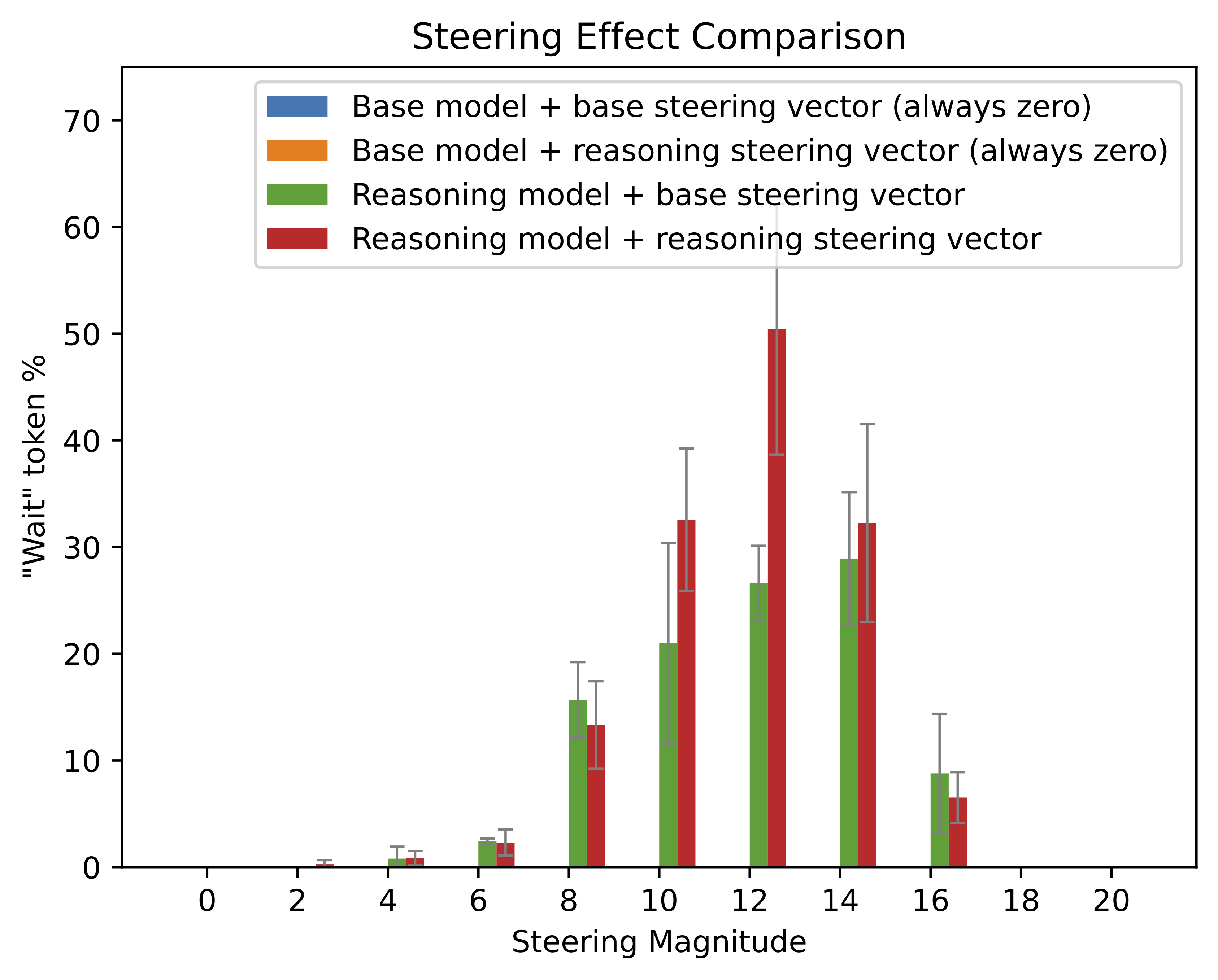

- We computed a steering vector for backtracking using base model activations.

- It causes the associated fine-tuned reasoning model to backtrack.

- But it doesn't cause the base model to backtrack.

- That's weird!

Introduction

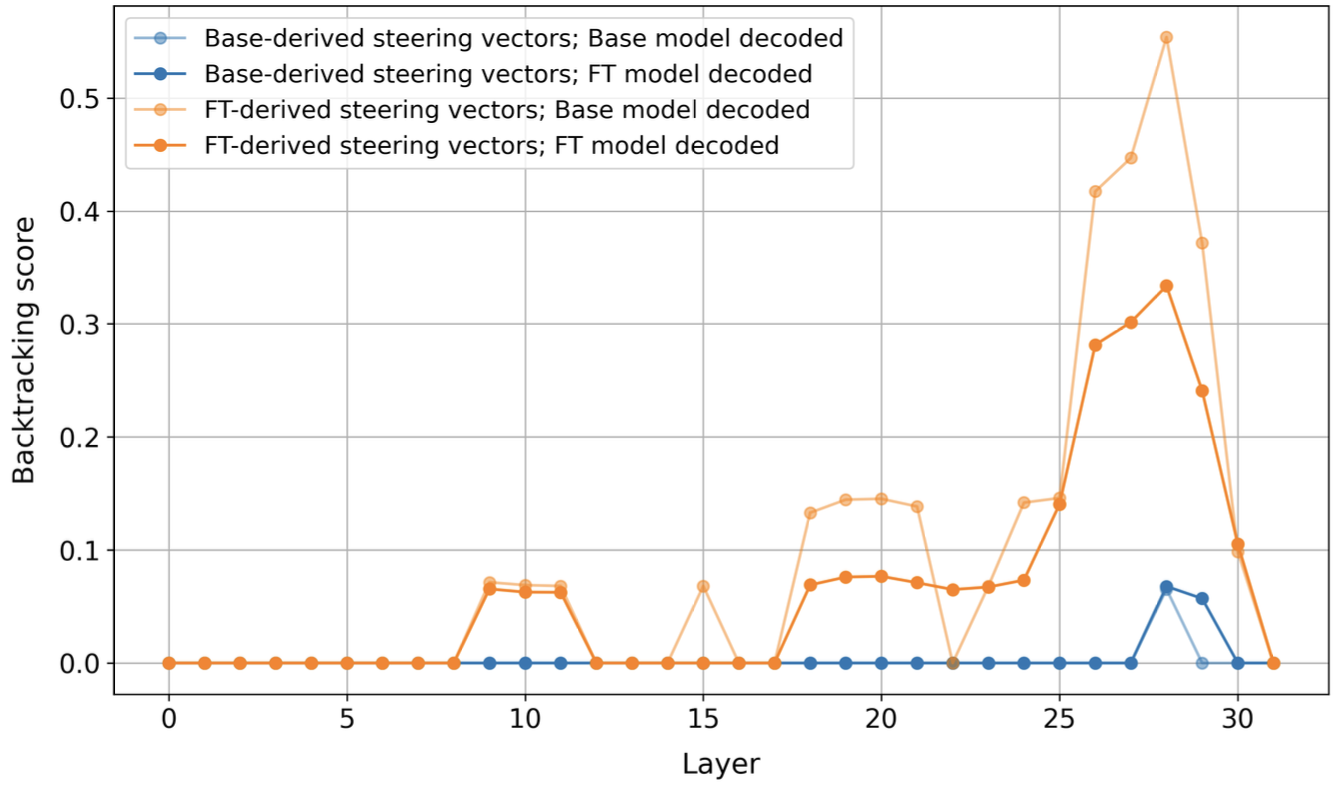

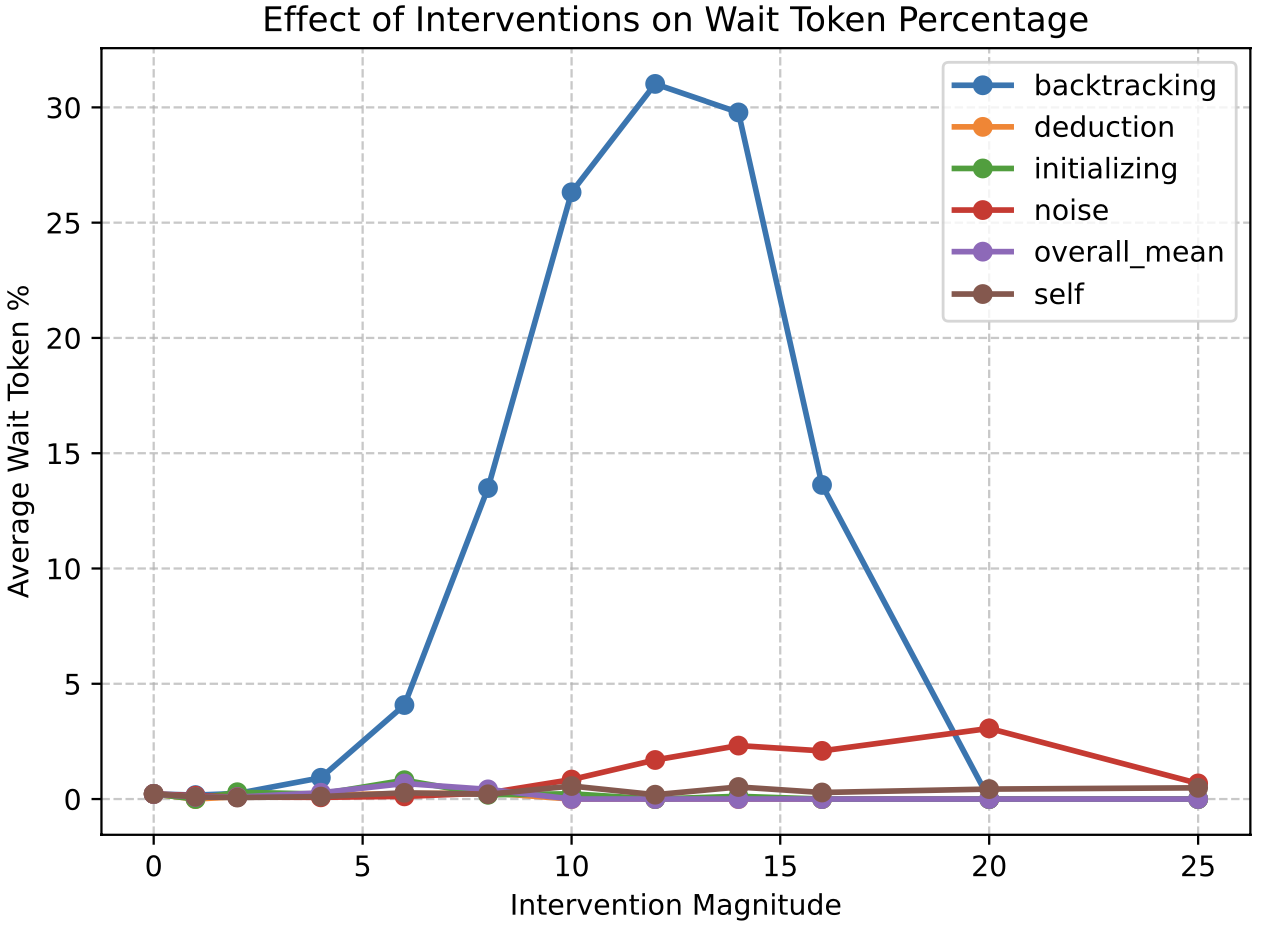

Reasoning models output "Wait" a lot. How did they learn to do this? Backtracking is an emergent behavior in RL-finetuned reasoning models like DeepSeek-R1, and appears to contribute substantially to these models' improved reasoning capabilities. We study representations related to this behavior using steering vectors, and find a direction that is present in both base models and the associated reasoning-finetuned models, but induces backtracking only in the reasoning models. We interpret this direction as representing some concept upstream of backtracking which the base model has learned to keep track of [...]
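To make "steering vector" concrete, here is a minimal sketch on toy data. It assumes a difference-of-means construction, which is one common way to build such a vector; this excerpt does not say which construction the authors used. The function names, the 4-dimensional toy activations, and the `scale` value are all hypothetical and chosen only for illustration.

```python
import random


def mean_vector(rows):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def steering_vector(pos_acts, neg_acts):
    """Difference of means: mean(pos_acts) - mean(neg_acts).

    ASSUMPTION: difference of means is a common recipe, not necessarily
    the one used in the paper.
    """
    mu_pos = mean_vector(pos_acts)
    mu_neg = mean_vector(neg_acts)
    return [p - q for p, q in zip(mu_pos, mu_neg)]


def apply_steering(hidden, vector, scale):
    """Add scale * vector to a single hidden-state vector."""
    return [h + scale * v for h, v in zip(hidden, vector)]


# Toy stand-ins for activations (hypothetical data, not model outputs).
# "pos" plays the role of activations at positions associated with
# backtracking; "neg" plays the role of all other positions.
rng = random.Random(0)
dim = 4
concept = [1.0, 0.0, 0.0, 0.0]  # hypothetical "backtracking" direction
neg = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(200)]
pos = [[rng.gauss(0.0, 0.1) + c for c in concept] for _ in range(200)]

vec = steering_vector(pos, neg)

# Steering: add the scaled vector to a hidden state during generation.
steered = apply_steering([0.0] * dim, vec, scale=2.0)
```

In the setting described above, the activations would come from the base model, and the resulting vector would be added to the hidden states of either the base model or the reasoning-finetuned model to compare their behavior.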

---

Outline:

(00:32) TL;DR

(00:51) Introduction

(03:22) Analysis

(03:25) Logit Lens

(03:53) Baseline Comparison

(04:45) Conclusion

---

First published: July 23rd, 2025

Linkpost URL: https://arxiv.org/abs/2507.12638

---

Narrated by TYPE III AUDIO.
