This might be beating a dead horse, but there are several "mysterious" problems LLMs are bad at that all seem to have the same cause. I wanted an article I could reference when this comes up, so I wrote one.

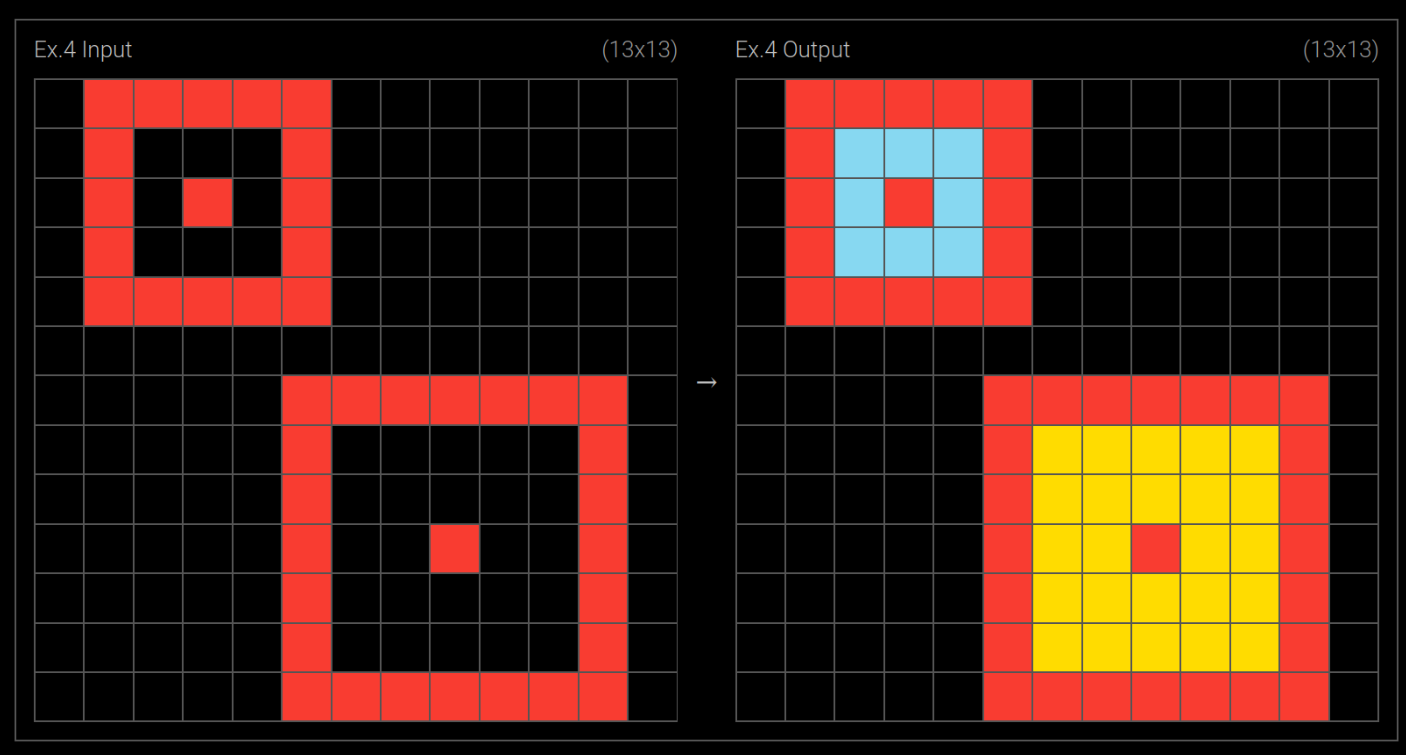

- LLMs can't count the number of R's in strawberry.

- LLMs used to be bad at math.

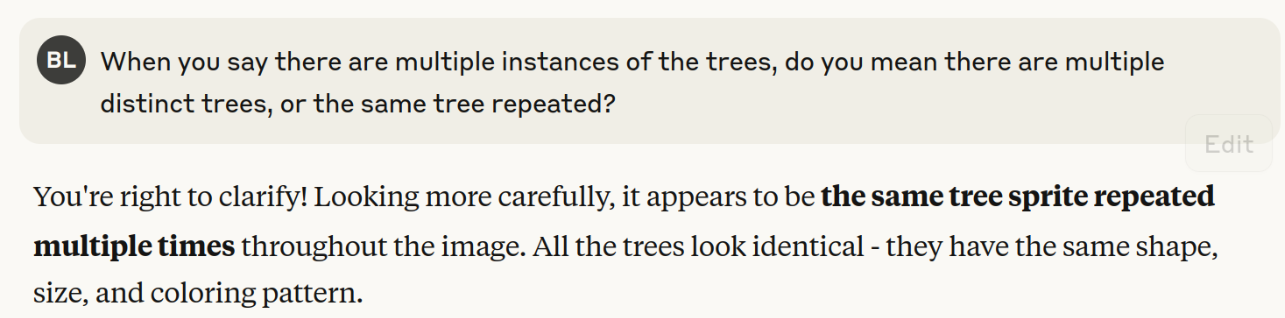

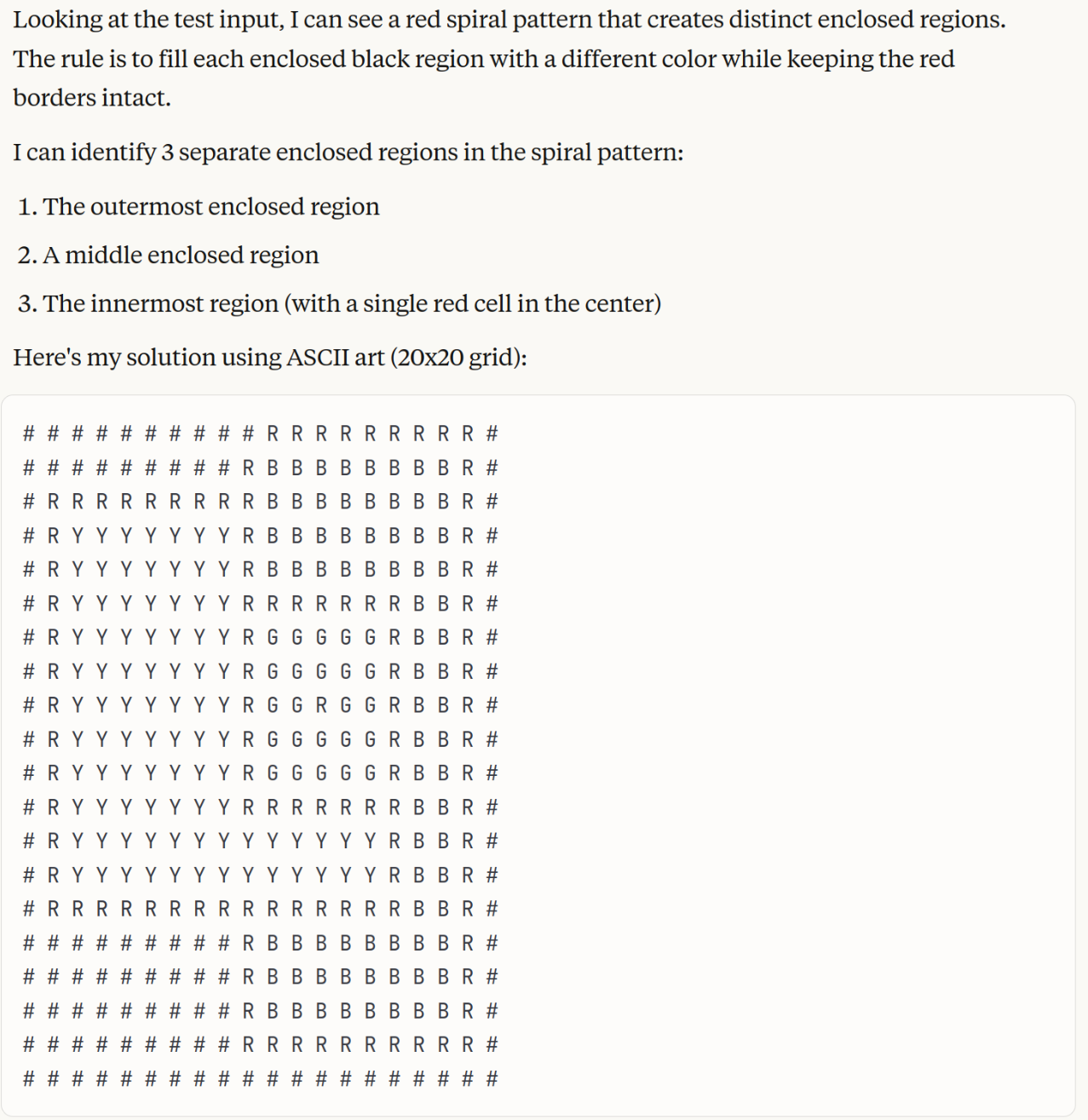

- Claude can't see the cuttable trees in Pokemon.

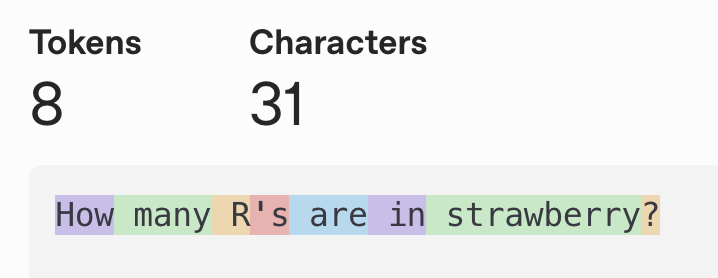

- LLMs are bad at any benchmark that involves visual reasoning.

What do these problems all have in common? The LLM we're asking to solve these problems can't see what we're asking it to do.

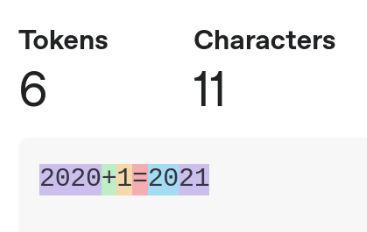

How many tokens are in 'strawberry'?

Current LLMs almost always process groups of characters, called tokens, instead of individual characters. They do this for performance reasons[1]: grouping 4 characters (on average) into a token cuts the number of positions the model has to process by about 4x, so roughly 4x more text fits in the same context window.
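To make the point concrete, here is a minimal sketch of what a model's input might look like after tokenization. The vocabulary, the token IDs, and the `toy_tokenize` helper are all hypothetical and chosen for illustration. Real tokenizers typically learn their vocabulary with byte-pair encoding or a similar method; this sketch swaps in a simple greedy longest-match split, and a real tokenizer may split "strawberry" differently.

```python
# Hypothetical toy vocabulary: the pieces and IDs are made up for illustration
# and are not taken from any real tokenizer.
TOY_VOCAB = {"str": 101, "aw": 102, "berry": 103}


def toy_tokenize(text, vocab=TOY_VOCAB):
    """Greedy longest-match split (an assumption, not real BPE).

    Characters that match no vocabulary entry become single-character
    tokens with the placeholder ID -1.
    """
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate piece starting at position i first.
        for j in range(len(text), i, -1):
            piece = text[i:j]
            if piece in vocab:
                tokens.append((piece, vocab[piece]))
                i = j
                break
        else:
            # No vocabulary entry matched: fall back to one character.
            tokens.append((text[i], -1))
            i += 1
    return tokens


tokens = toy_tokenize("strawberry")
token_ids = [token_id for _, token_id in tokens]

print(tokens)                    # the pieces a human can still read
print(token_ids)                 # what the model actually receives
print("strawberry".count("r"))   # trivial on characters, invisible in the IDs
```

Under these assumptions the ten characters collapse into three opaque IDs. Nothing in the ID sequence says how many R's each piece contains, so the model can only answer by having memorized the spelling of each token.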

So, when you see the question "How many [...]

---

Outline:

(00:48) How many tokens are in strawberry?

(02:10) You thought New Math was confusing...

(03:43) Why can Claude see the forest but not the cuttable trees?

(05:43) Visual reasoning with blurry vision

(06:54) Is this fixable?

The original text contained 7 footnotes which were omitted from this narration.

---

First published:

July 20th, 2025

Source:

https://www.lesswrong.com/posts/uhTN8zqXD9rJam3b7/llms-can-t-see-pixels-or-characters

---

Narrated by TYPE III AUDIO.

---

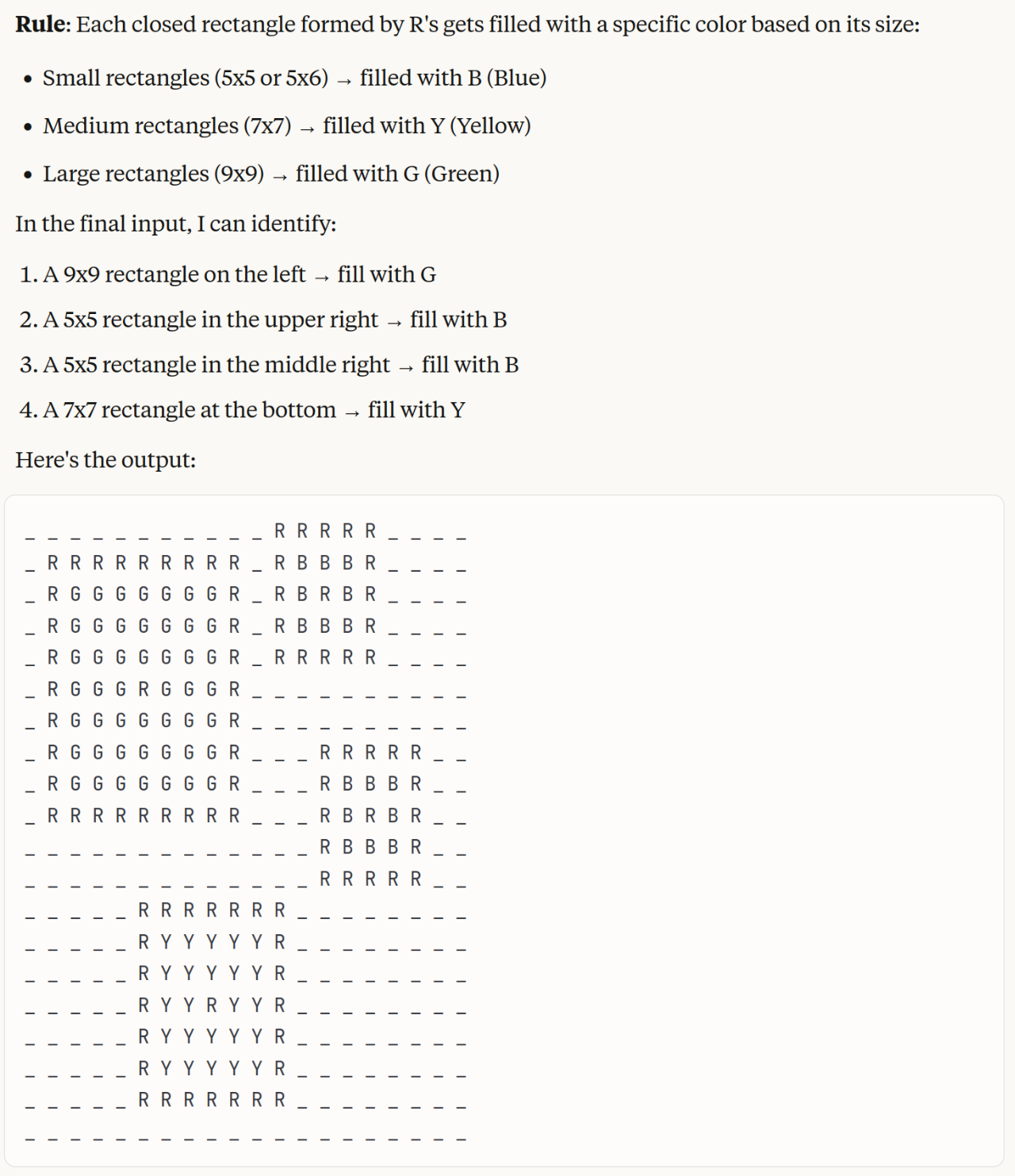

