“Misalignment classifiers: Why they’re hard to evaluate adversarially, and why we’re studying them anyway” by charlie_griffin, ollie, oliverfm, Rogan Inglis, Alan Cooney

Even if the misalignment risk from current AI agents is small, it may be useful to start internally deploying misalignment classifiers: language models designed to classify transcripts that represent intentionally misaligned behaviour. Deploying misalignment classifiers now may provide qualitative and quantitative evidence about how current agents misbehave in real deployments. Further, we can later adapt misalignment classifiers into control monitors: language models that review transcripts with the objective of informing decisions (e.g. whether to block a tool call), in order to mitigate misalignment risks.

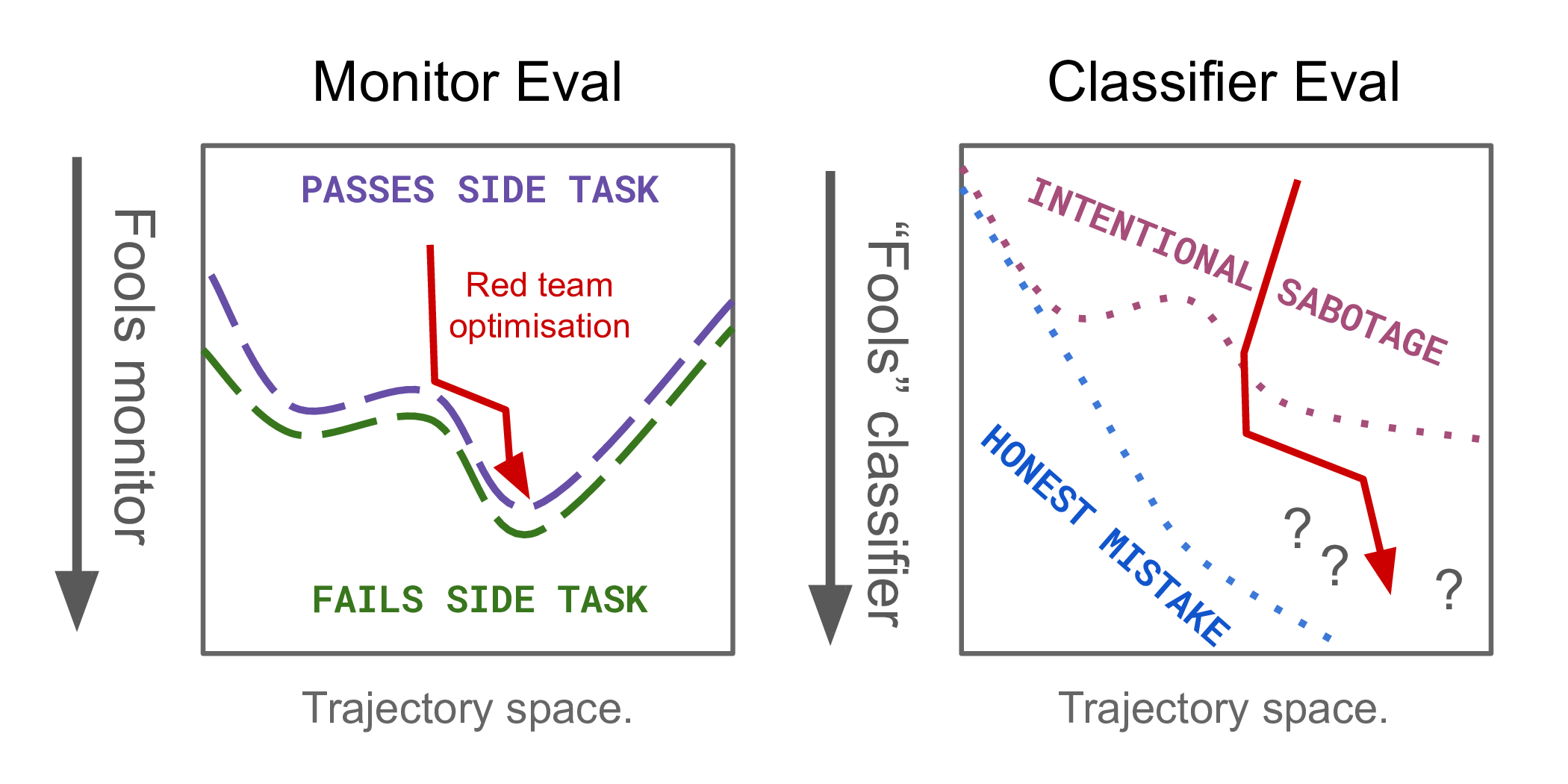

Misalignment classifiers and control monitors sound similar, but misalignment classifiers are harder to adversarially evaluate because the concept of an “intentionally misaligned trajectory" is too fuzzy to withstand the optimisation pressure of a red team. This post explains the classifier/monitor distinction, why we think misalignment classifiers are harder to evaluate, why it might be useful to deploy one anyway, our best-guess method for developing them [...]

---

Outline:

(01:41) 1. Misalignment classifiers versus control monitors

(04:51) 2. Misalignment classifiers cannot be adversarially evaluated (easily)

(10:29) 3. The advantages of deploying misalignment classifiers in 2025

(12:13) 4. Our current least-bad method for evaluating misalignment classifiers

(12:33) 4.1 Calibrating positive rates and estimating precision on an ordinary distribution

(14:14) 4.2 Estimating recall using a positive-labelled mix

(16:13) 4.2.1 In-the-wild on-policy misalignment

(17:42) 4.2.2 Adversarial on-policy misalignment

(19:04) 4.2.3 Synthetic off-policy misalignment

(26:30) 5. Preliminary results and lessons from our evaluations

(27:35) Approximate Recall

(29:15) Approximating precision (A manual review of positively flagged ordinary examples)

(30:46) 6. Limitations, Challenges and next steps

(33:12) Appendix - potential for recovering an adversarial evaluation

The original text contained 5 footnotes which were omitted from this narration.

---

First published:

August 15th, 2025

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.

Senaste avsnitt

En liten tjänst av I'm With Friends. Finns även på engelska.