“Simplex Progress Report - July 2025” by Adam Shai, Paul Riechers, hrbigelow, Eric Alt, mntss

Thanks to Jasmina Urdshals, Xavier Poncini, and Justis Mills for comments.

Introduction

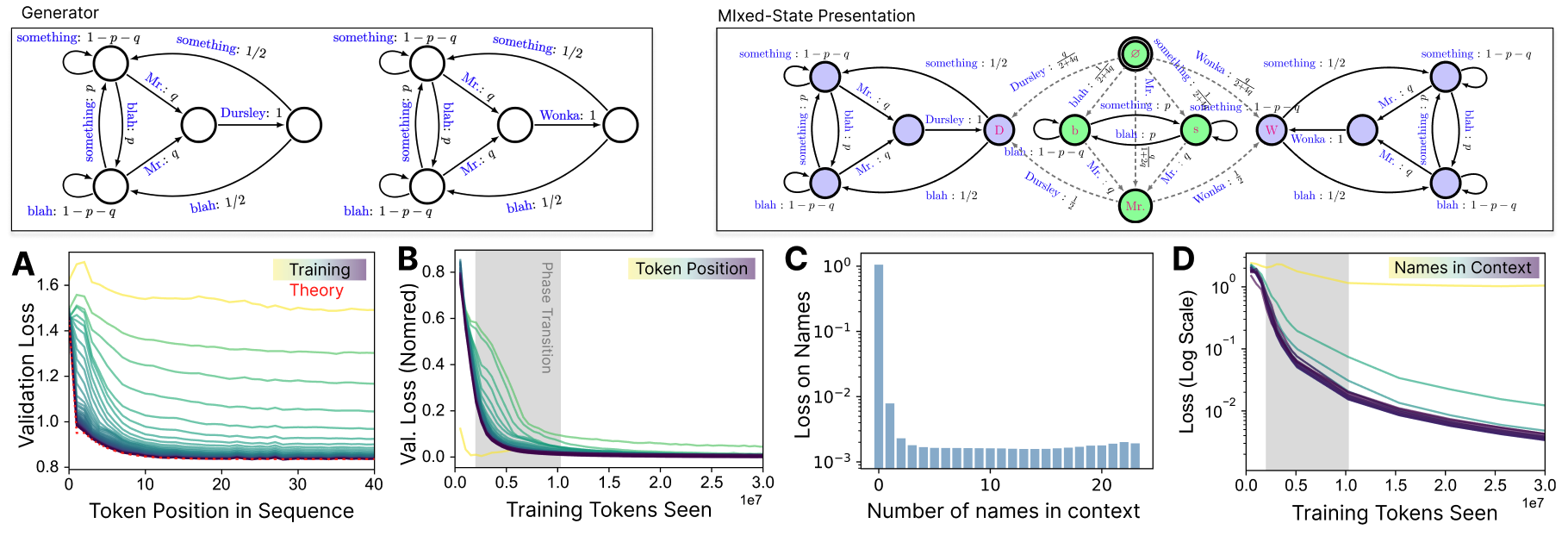

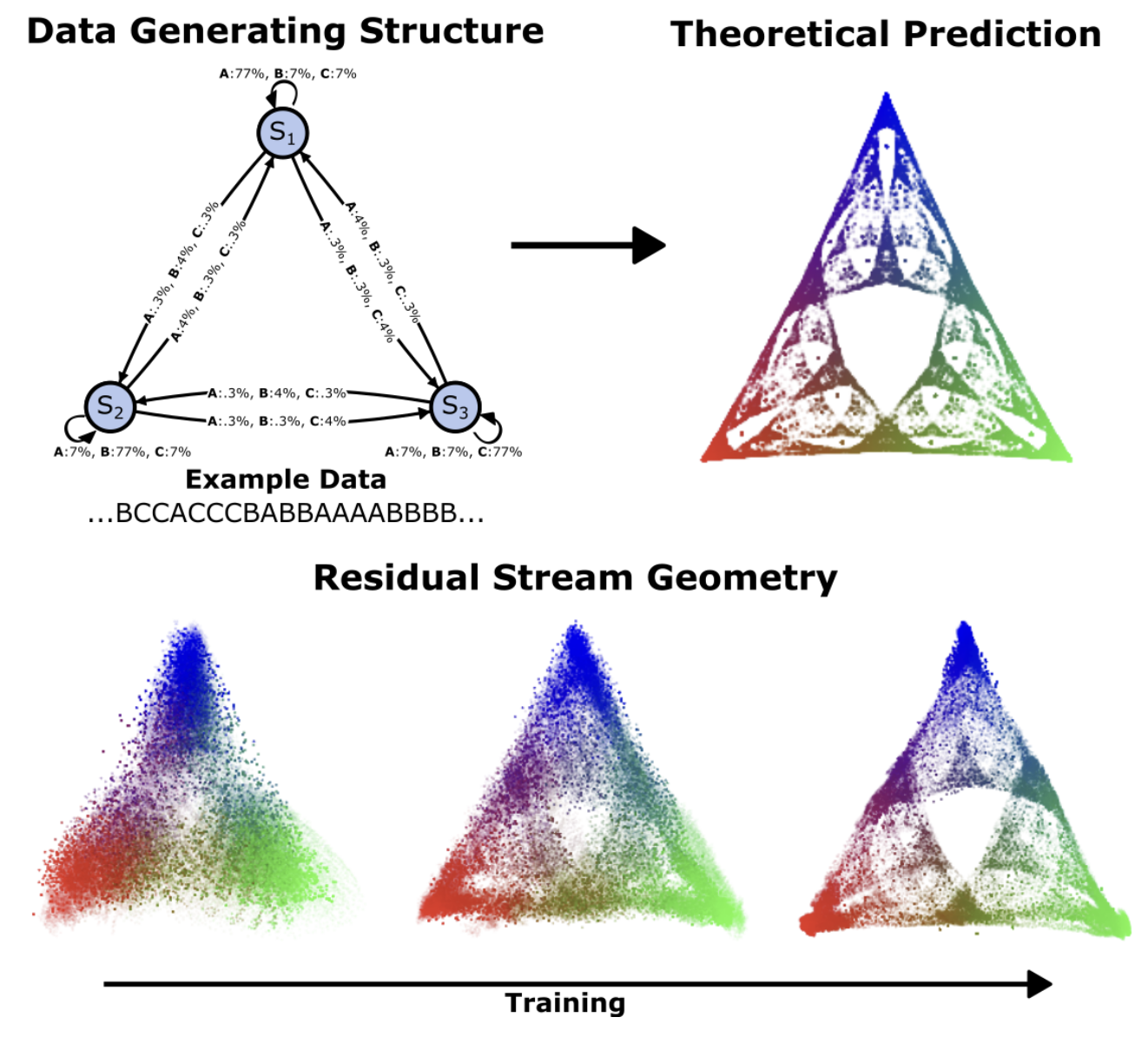

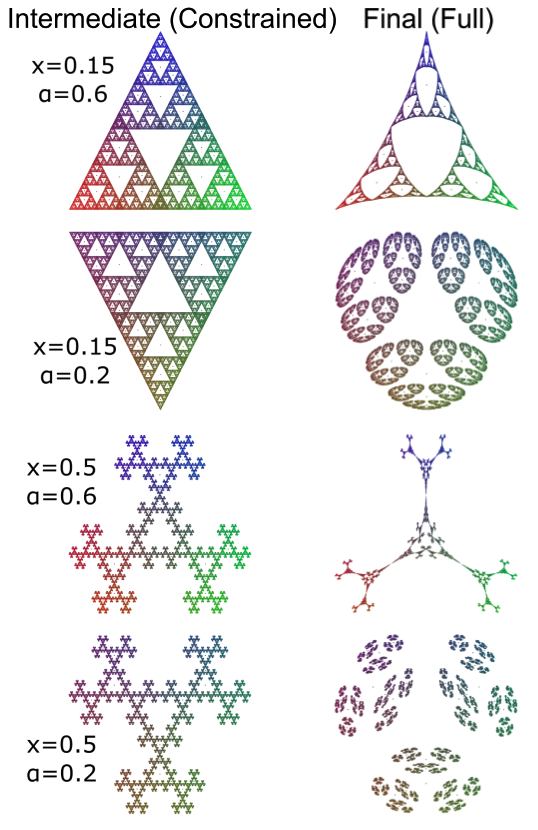

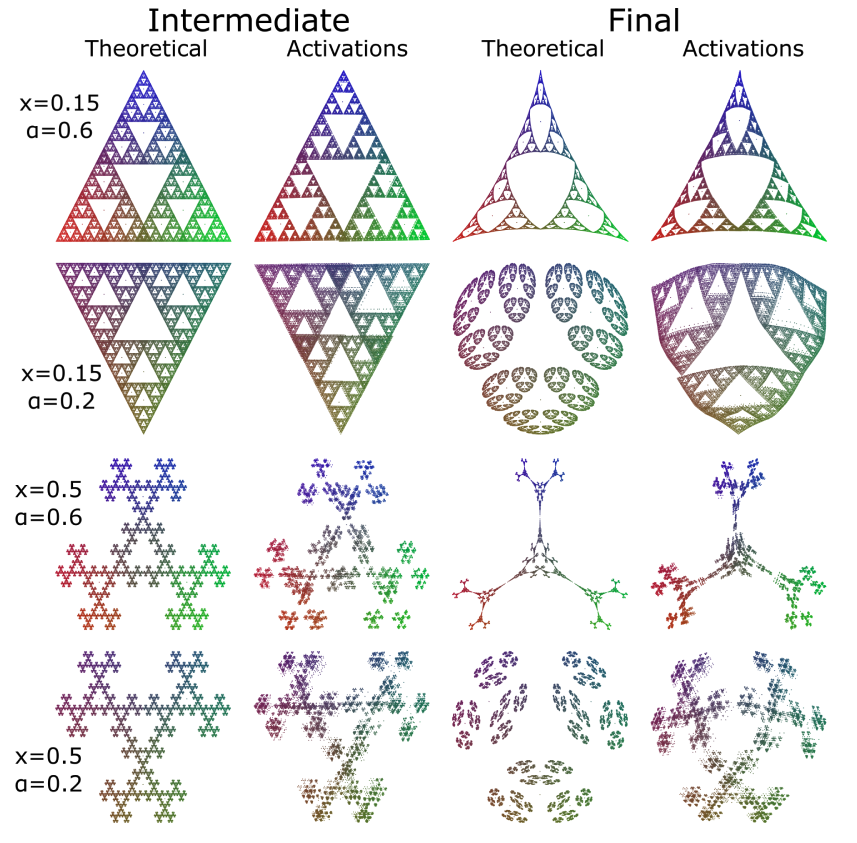

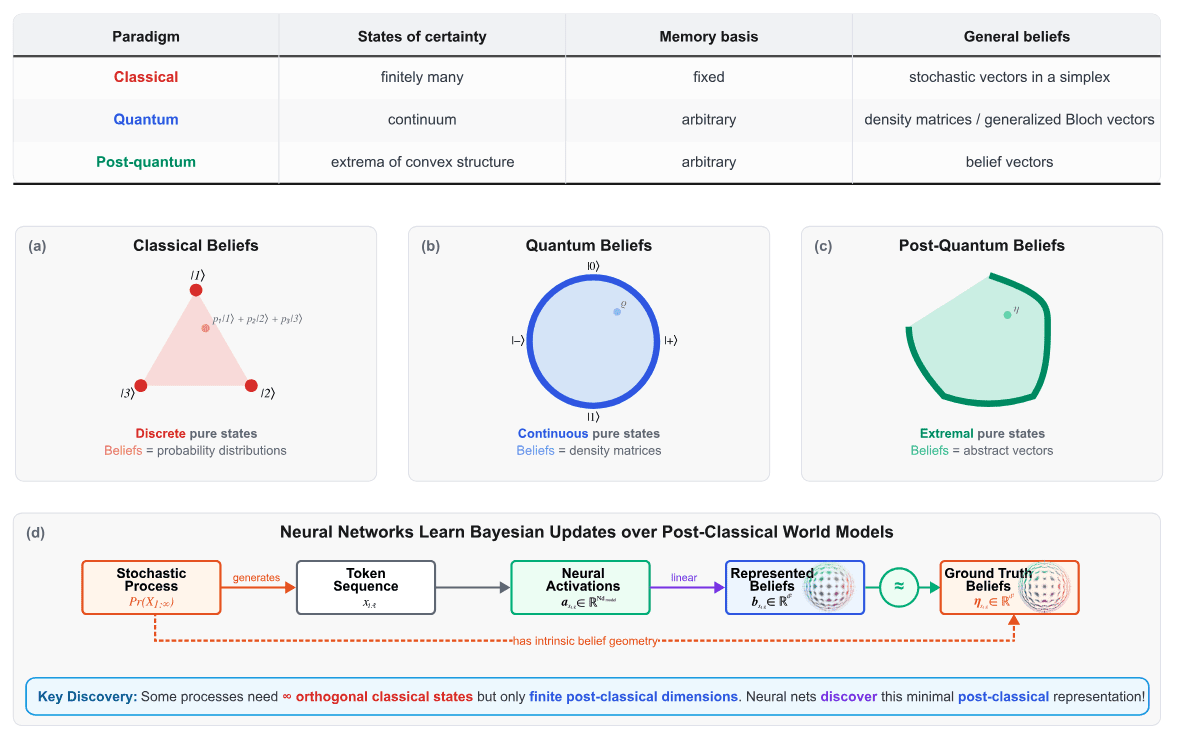

At Simplex, our mission is to develop a principled science of the representations and emergent behaviors of AI systems. Our initial work showed that transformers linearly represent belief state geometries in their residual streams. We see that work as a first step toward understanding what, fundamentally, we are training AI systems to do, and what representations we are training them to have.
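In this framework, a belief state is the Bayesian posterior over the hidden states of the process that generated the training data. It is updated after each token, and the "geometry" is how these belief vectors are arranged inside the probability simplex. The sketch below illustrates that update. It assumes a hypothetical two-state, two-symbol hidden Markov model whose probabilities are made up for illustration and are not a process from the report. The names `T`, `update_belief`, and `belief_trajectory` are likewise hypothetical.

```python
import numpy as np

# Hypothetical 2-state, 2-symbol hidden Markov model (illustrative numbers only).
# T[x][i, j] = P(emit symbol x and move to state j | current state i).
# For each current state i, the entries summed over all x and j equal 1.
T = {
    0: np.array([[0.6, 0.1],
                 [0.1, 0.2]]),
    1: np.array([[0.2, 0.1],
                 [0.3, 0.4]]),
}


def update_belief(eta: np.ndarray, x: int) -> np.ndarray:
    """Bayesian filtering step: condition on symbol x, then renormalize.

    eta is a probability vector over hidden states (a point in the simplex).
    The result is the distribution over the next hidden state given all
    symbols seen so far, including x.
    """
    unnormalized = eta @ T[x]  # joint weight of (x, next state)
    return unnormalized / unnormalized.sum()  # the sum equals P(x | past)


def belief_trajectory(sequence, eta0: np.ndarray) -> list:
    """Return the sequence of belief states visited while reading `sequence`."""
    beliefs = [eta0]
    for x in sequence:
        beliefs.append(update_belief(beliefs[-1], x))
    return beliefs


# The stationary distribution of T[0] + T[1] is [2/3, 1/3]; start there.
eta0 = np.array([2 / 3, 1 / 3])
for belief in belief_trajectory([0, 1, 1, 0], eta0):
    print(belief)
```

Each printed vector is a point in the simplex. For two states, the simplex is a line segment. For three states, it is a triangle. The set of points visited over many sequences is the kind of structure the report describes as being linearly embedded in the residual stream.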

Since that time, we have used that framework to make progress in a number of directions, which we will present in the sections below. The projects ask, and provide answers to, the following questions:

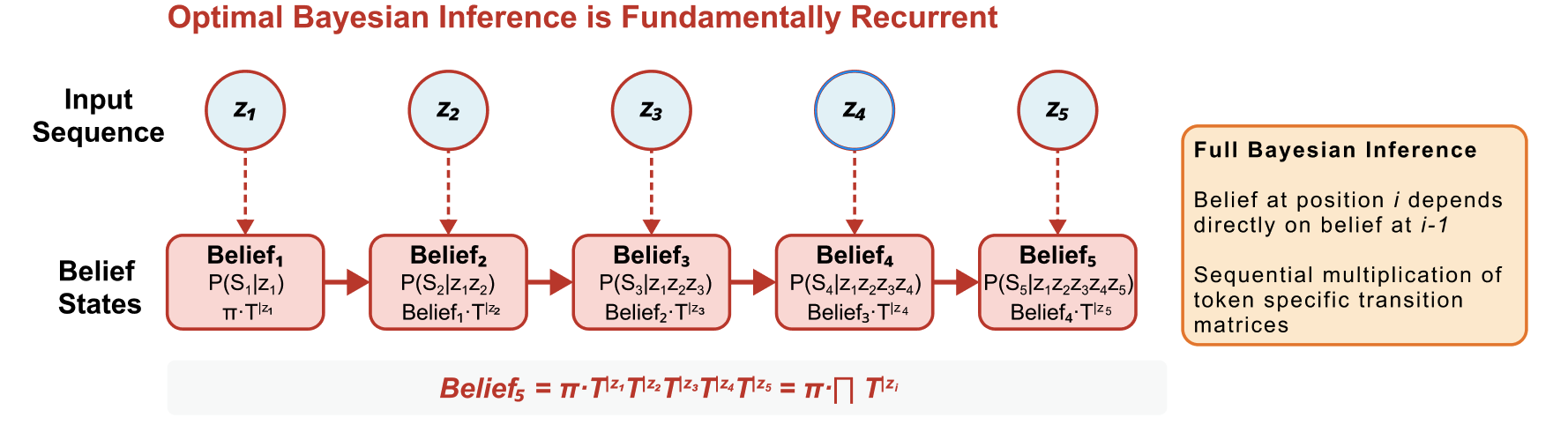

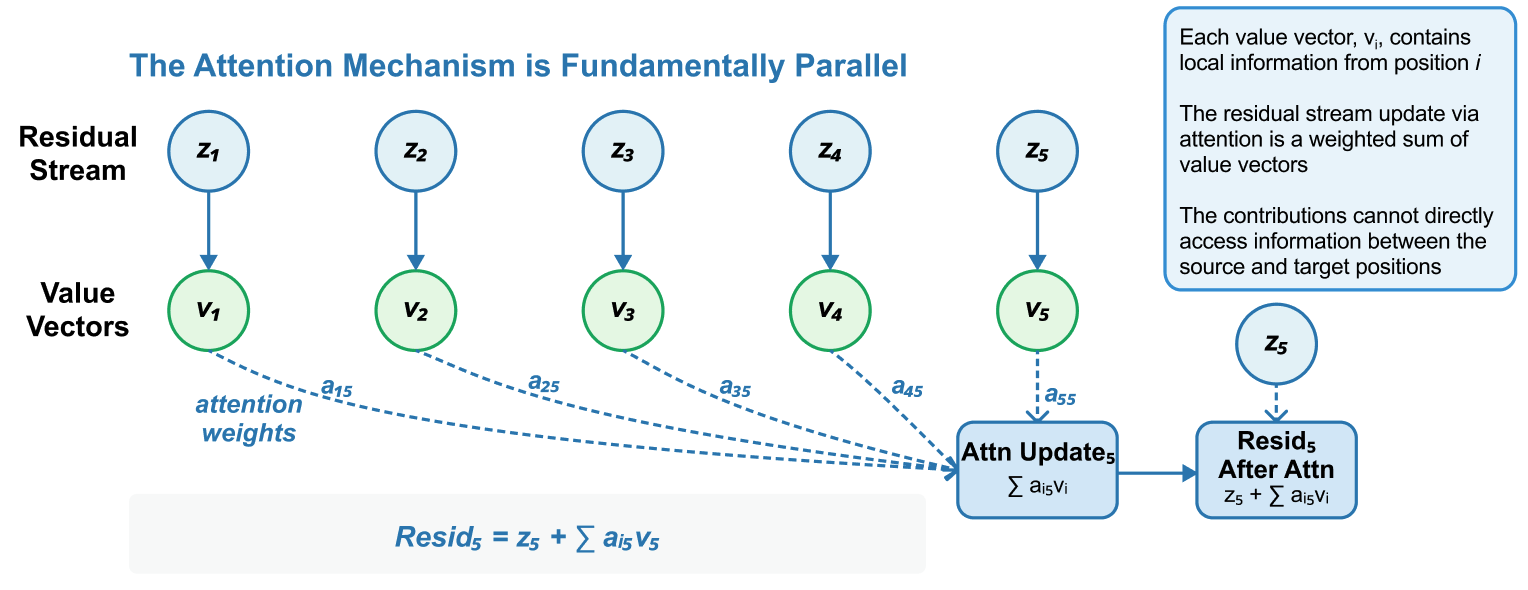

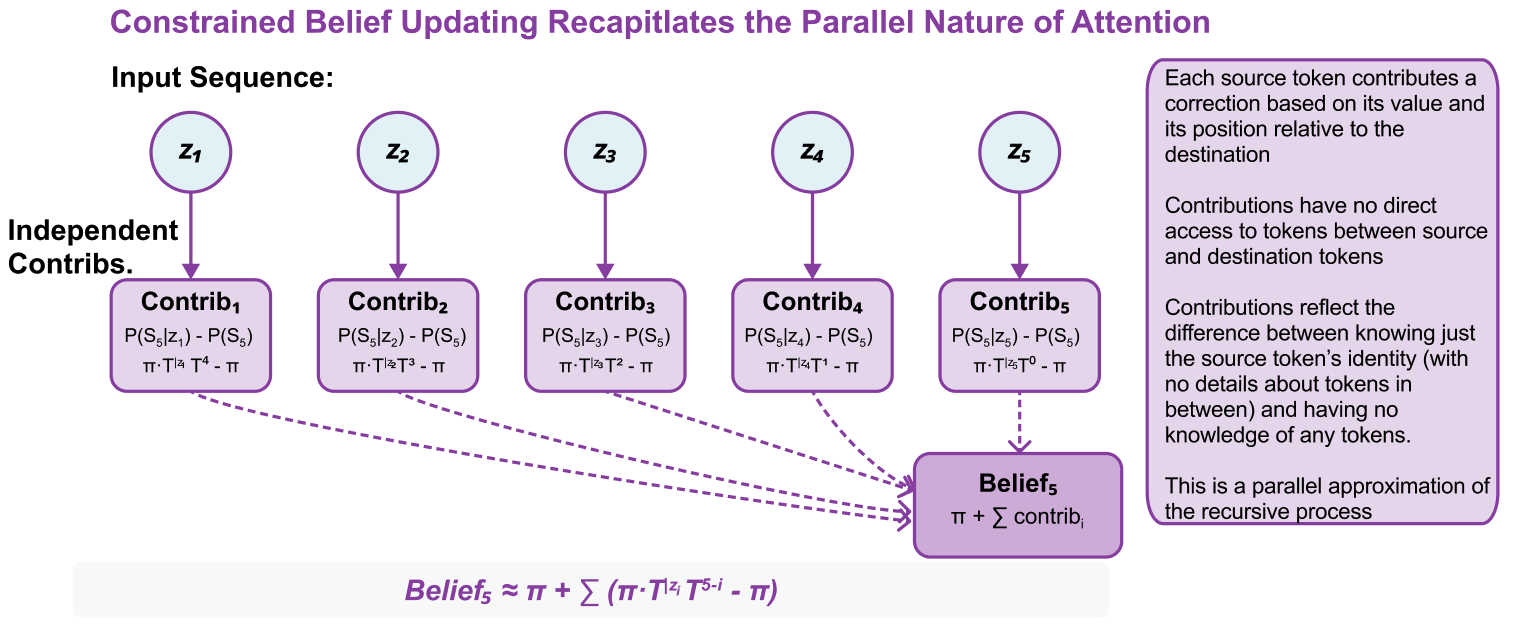

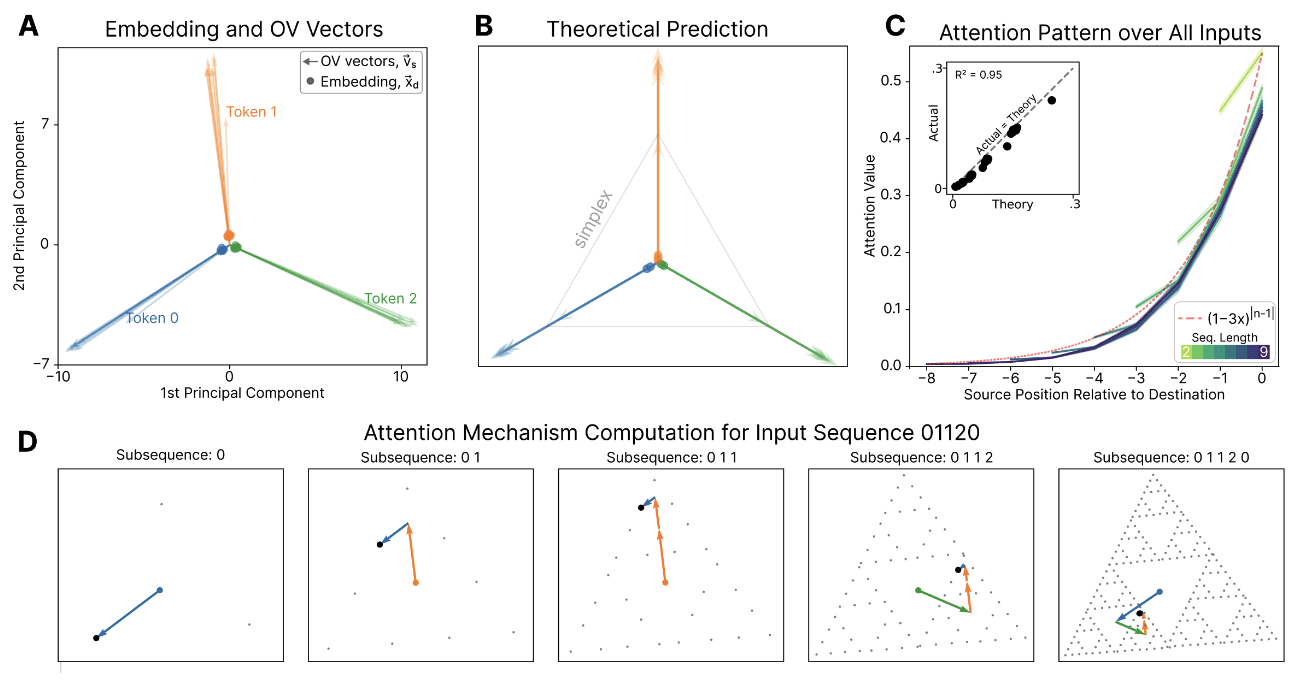

- How, mechanistically, do transformers use attention to geometrically arrange their activations according to the belief geometry?

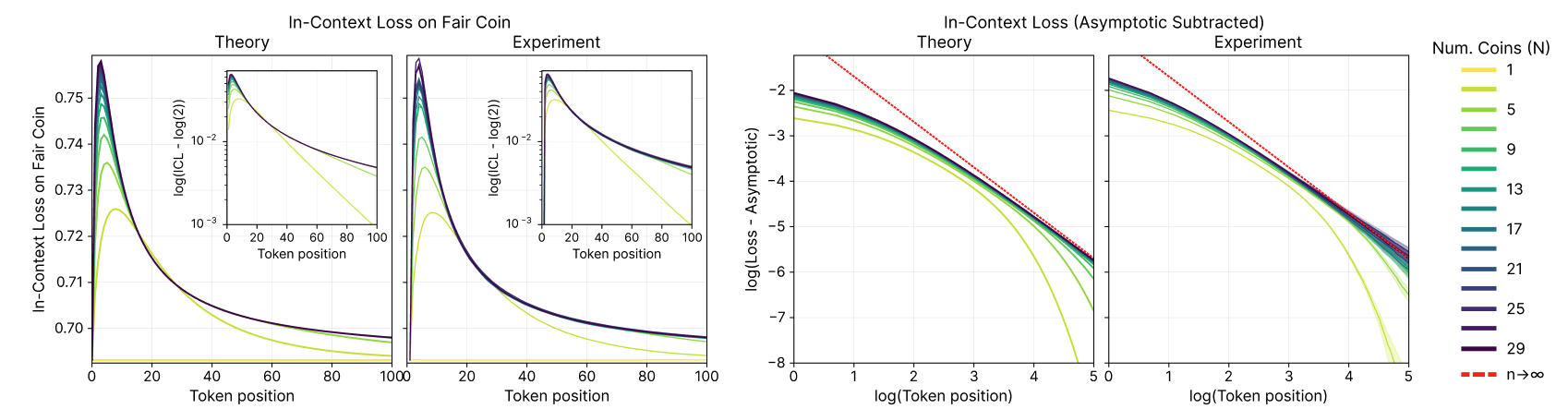

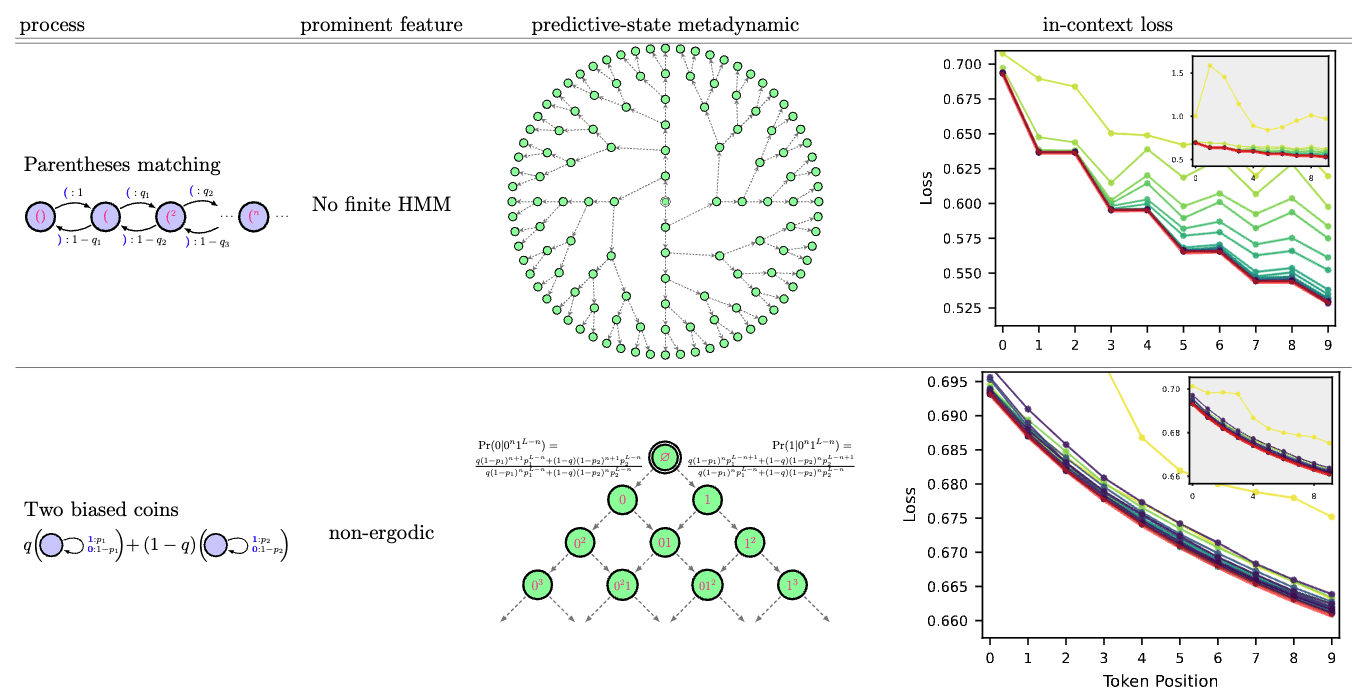

- What is the nature of in-distribution in-context learning (ICL), and how does it relate to structure in the training [...]

---

Outline:

(00:19) Introduction

(02:26) The foundational theory

(04:58) Answers to the Questions

(05:13) 1. How, mechanistically, do transformers use attention to geometrically arrange their activations according to the belief geometry?

(05:52) 2. What is the nature of in-distribution in-context learning (ICL), and how does it relate to structure in the training data?

(06:32) 3. What is the fundamental nature of computation in neural networks? What model of computation should we be thinking about when trying to make sense of these systems?

(08:05) Completed Projects

(08:09) Constrained Belief Updates Explain Geometric Structures in Transformer Representations

(15:16) Next-Token Pretraining Implies In-Context Learning

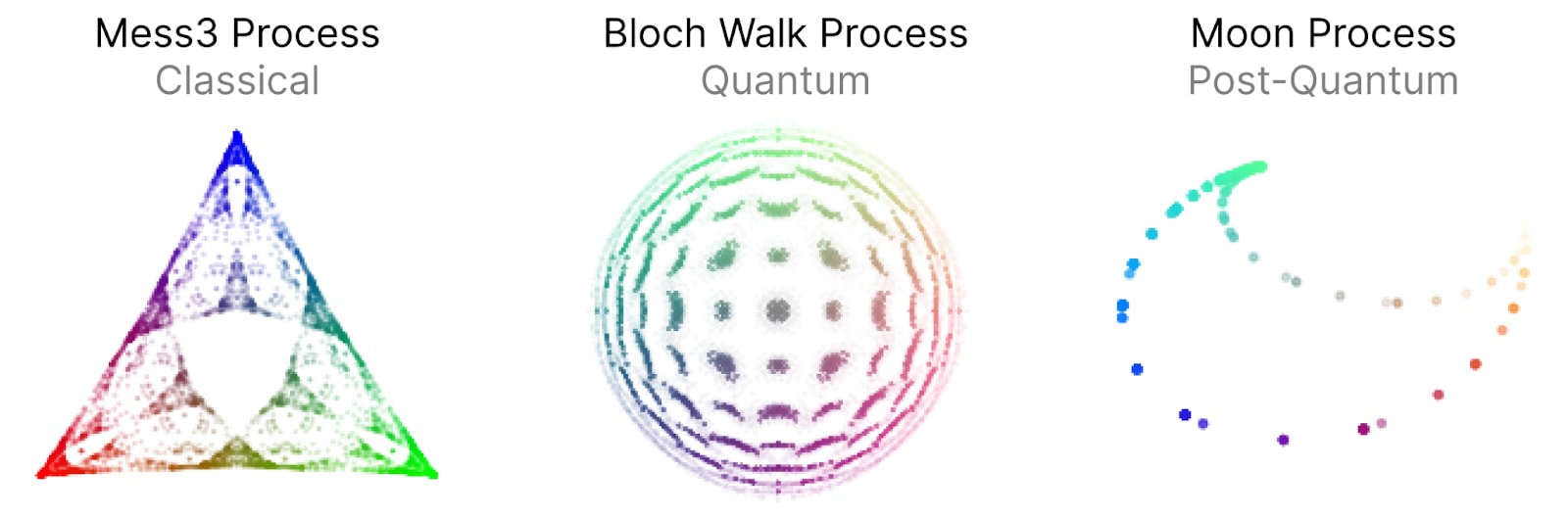

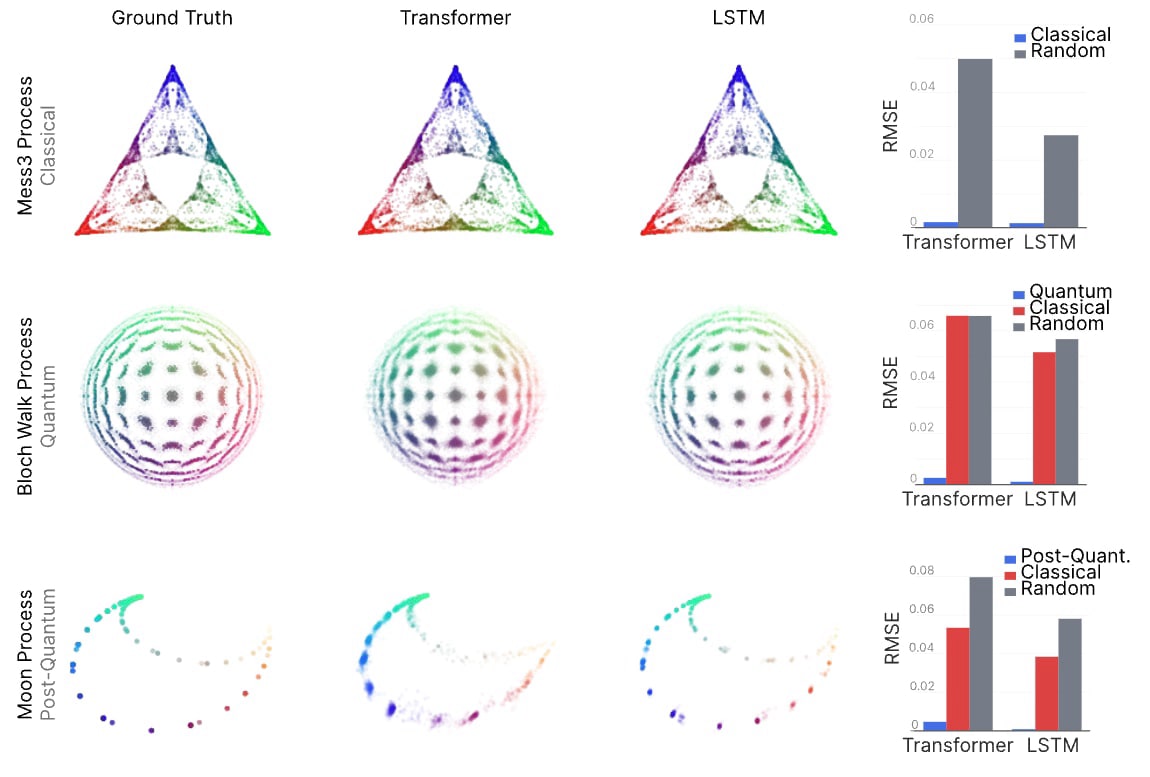

(22:55) Neural networks leverage nominally quantum and post-quantum representations

The original text contained 8 footnotes, which were omitted from this narration.

---

First published:

July 28th, 2025

Source:

https://www.lesswrong.com/posts/fhkurwqhjZopx8DKK/simplex-progress-report-july-2025

---

Narrated by TYPE III AUDIO.

