“Sparsely-connected cross-layer transcoders: preliminary findings” by jacob_drori

Audio note: this article contains 147 uses of LaTeX notation, so the narration may be difficult to follow. There's a link to the original text in the episode description.

TLDR: I develop a method to sparsify the internal computations of a language model. My approach is to train cross-layer transcoders that are sparsely-connected: each latent depends on only a few upstream latents. Preliminary results are moderately encouraging: reconstruction error decreases with number of connections, and both latents and their connections often appear interpretable. However, both practical and conceptual challenges remain.
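The key constraint in the TLDR is that each latent depends on only a few upstream latents. Below is a minimal NumPy sketch of that idea under simplifying assumptions. It assumes one group of upstream latents feeds one group of downstream latents through a dense weight matrix. That matrix is pruned so each downstream latent keeps only its k largest-magnitude incoming weights, and a ReLU is then applied. The function names (`sparsify_connections`, `downstream_latents`), the top-k pruning rule, and the ReLU are hypothetical illustrations, not the author's architecture. The article's sparsely-connected mode is built on virtual weights and masking, which this sketch does not reproduce.

```python
import numpy as np


def sparsify_connections(weights: np.ndarray, k: int) -> np.ndarray:
    """Keep only the k largest-magnitude incoming weights per downstream latent.

    Hypothetical illustration: `weights` has shape (n_down, n_up), and row i
    holds the connection strengths from every upstream latent into
    downstream latent i. All other entries are zeroed out.
    """
    mask = np.zeros_like(weights, dtype=bool)
    # Column indices of the k largest |w| in each row.
    top = np.argsort(-np.abs(weights), axis=1)[:, :k]
    rows = np.arange(weights.shape[0])[:, None]
    mask[rows, top] = True
    return np.where(mask, weights, 0.0)


def downstream_latents(
    upstream: np.ndarray, sparse_weights: np.ndarray, bias: np.ndarray
) -> np.ndarray:
    """Compute downstream activations from upstream latent activations alone.

    Assumes a ReLU nonlinearity. Because each row of `sparse_weights` has at
    most k nonzeros, each output depends on at most k upstream latents.
    """
    return np.maximum(sparse_weights @ upstream + bias, 0.0)


# Usage: 2 downstream latents, 4 upstream latents, at most 2 connections each.
w = np.array([[0.1, -2.0, 0.5, 3.0],
              [1.0, 0.2, -0.3, 0.0]])
sparse_w = sparsify_connections(w, k=2)
acts = downstream_latents(np.ones(4), sparse_w, np.zeros(2))
```

Here the first downstream latent keeps only its connections from upstream latents 1 and 3, and the second keeps only those from latents 0 and 2. Raising k admits more connections per latent, which corresponds to the "number of connections" axis along which the TLDR reports reconstruction error decreasing.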

This work is in an early stage. If you're interested in collaborating, please reach out to jacobcd52@g***l.com.

0. Introduction

A promising line of mech interp research studies feature circuits[1]. The goal is to (1) identify representations of interpretable features in a model's latent space, and then (2) determine how earlier-layer representations combine to generate later ones. Progress [...]

---

Outline:

(01:04) 0. Introduction

(04:29) 1. Architecture

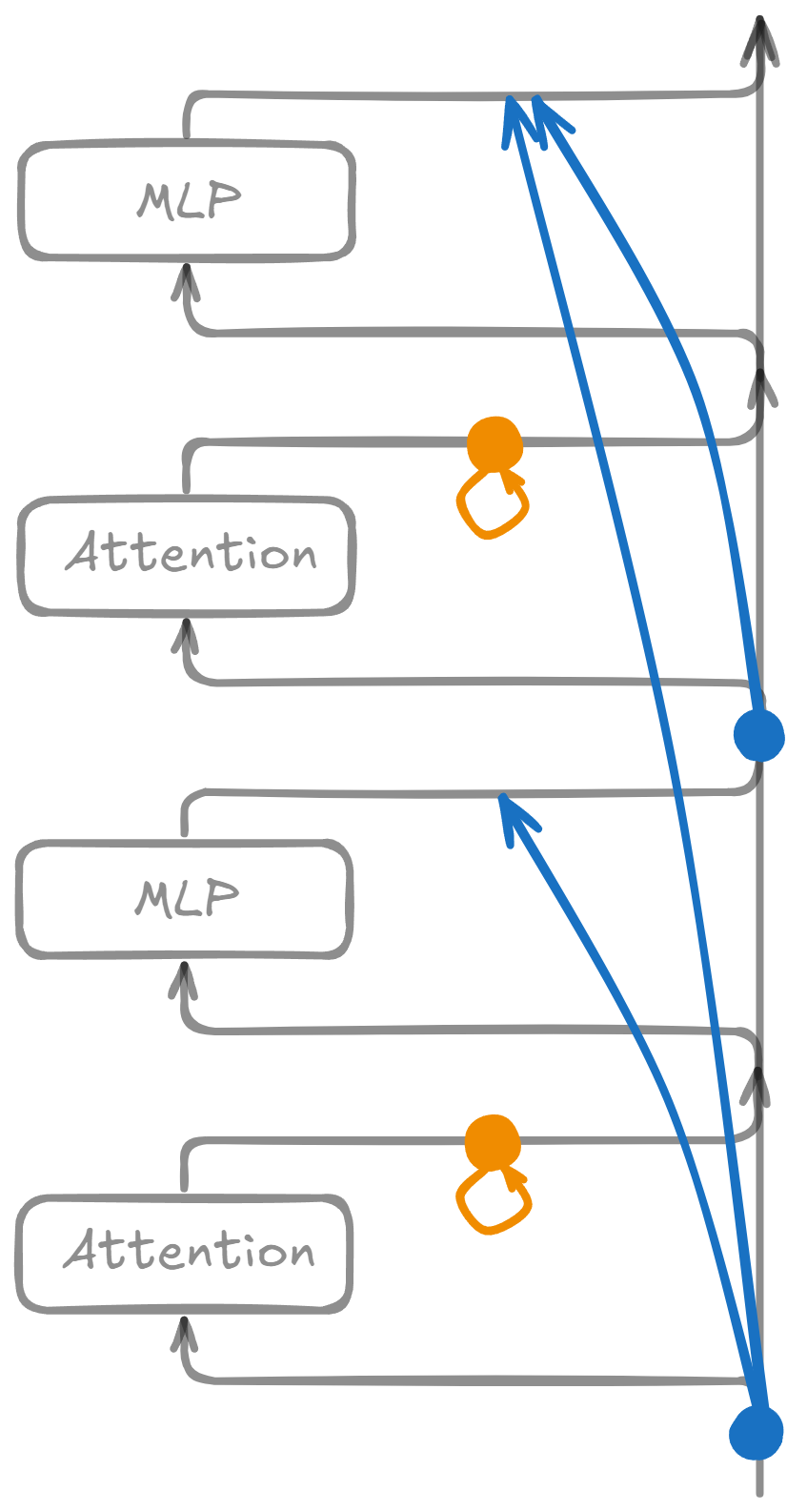

(05:05) Vanilla mode

(06:15) Sparsely-connected mode

(06:53) Virtual weights (simplified)

(09:47) Masking

(11:08) Recap

(12:21) 2. Training

(13:43) 3. Results

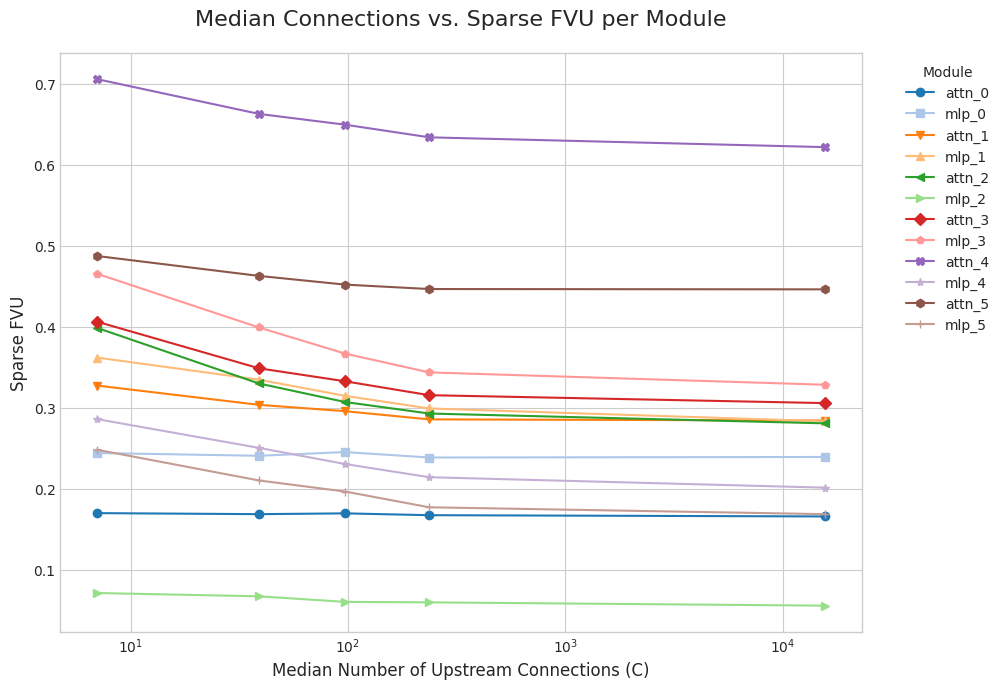

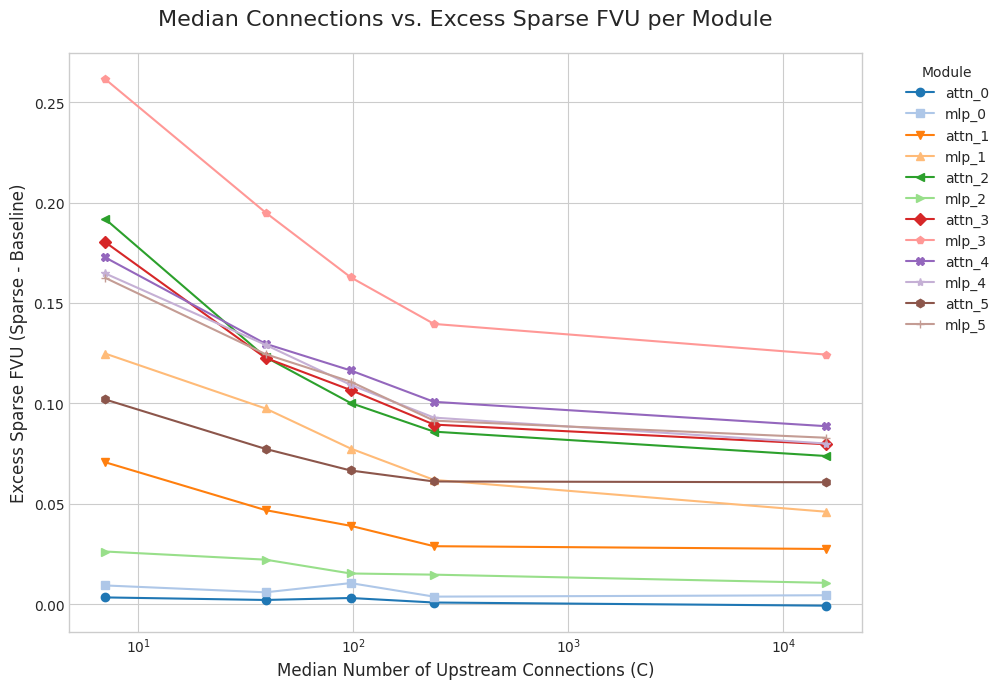

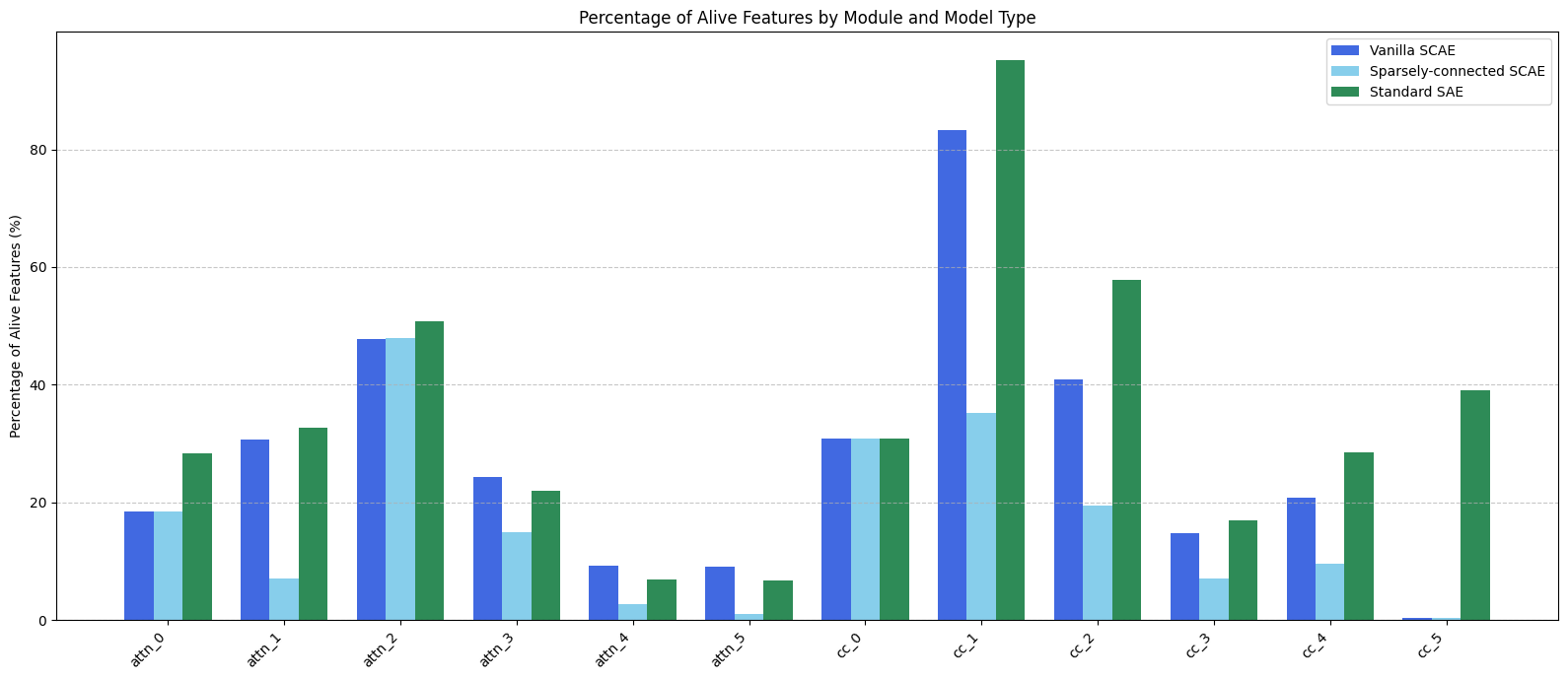

(14:23) Quantitative results

(17:00) Qualitative results

(17:55) Observations

(19:51) Dashboards

(20:13) How I updated on these results

(21:13) 4. Limitations

(21:17) Issue 1: Dead latents

(21:38) Issue 2: High excess FVU

(22:19) Issue 3: Memory

(23:43) Issue 4: Feature splitting

(25:13) 5. Conclusion

(26:07) Acknowledgements

(26:41) Appendix A: Prior work

(27:07) Circuit Tracing/Circuit Biology - Anthropic (2025)

(29:43) Jacobian SAEs - Farnik et al (2025)

(31:24) Appendix B: Virtual weights for downstream attention

The original text contained 7 footnotes which were omitted from this narration.

---

First published:

June 18th, 2025

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.
