Daniel notes: This is a linkpost for Vitalik's post. I've copied the text below so that I can mark it up with comments.

...

Special thanks to Balvi volunteers for feedback and review

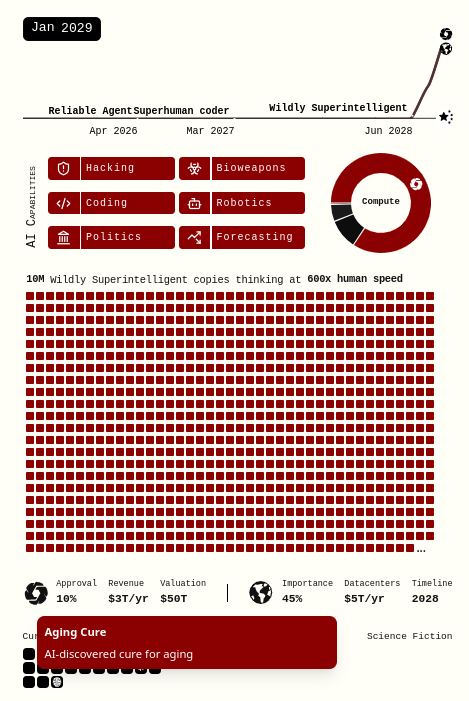

In April this year, Daniel Kokotajlo, Scott Alexander and others released what they describe as "a scenario that represents our best guess about what [the impact of superhuman AI over the next 5 years] might look like". The scenario predicts that by 2027 we will have made superhuman AI and the entire future of our civilization hinges on how it turns out: by 2030 we will get either (from the US perspective) utopia or (from any human's perspective) total annihilation.

In the months since then, there has been a large volume of responses, with varying perspectives on how likely the scenario that they presented is. For example:

- https://www.lesswrong.com/posts/gyT8sYdXch5RWdpjx/ai-2027-responses

- https://www.lesswrong.com/posts/PAYfmG2aRbdb74mEp/a-deep-critique-of-ai-2027-s-bad-timeline-models (see also: Zvi's response)

- [...]

---

Outline:

(04:24) Bio doom is far from the slam-dunk that the scenario describes

(10:16) What about combining bio with other types of attack?

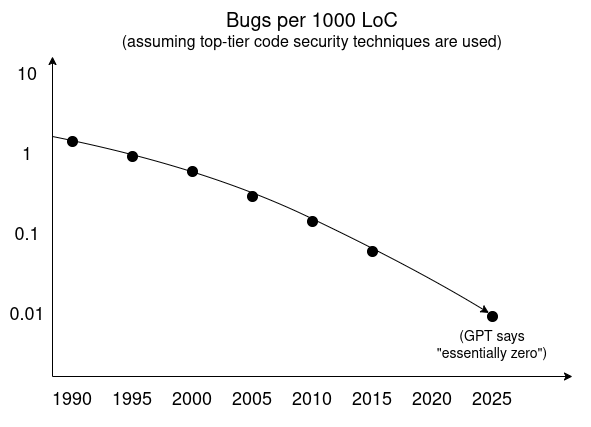

(11:51) Cybersecurity doom is also far from a slam-dunk

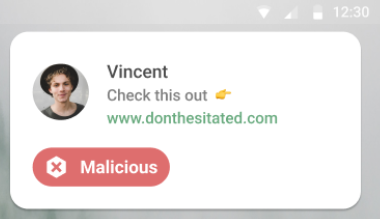

(15:29) Super-persuasion doom is also far from a slam-dunk

(18:17) Implications of these arguments

---

First published: July 11th, 2025

Source: https://www.lesswrong.com/posts/zuuQwueBpv9ZCpNuX/vitalik-s-response-to-ai-2027

---

Narrated by TYPE III AUDIO.

---

